Last week, Coinbase got hacked.

Hackers demanded a $20 million ransom after breaching a third-party system. They didn’t get passwords or crypto keys. But what they did get will put lives at risk:

- Names

- Home addresses

- Phone numbers

- Partial Social Security numbers

- Identity documents

- Bank info

That’s everything someone needs to impersonate you, blackmail you, or show up at your front door.

This isn’t hypothetical. There’s a growing wave of kidnappings and extortion targeting people with crypto exposure. Criminals are using leaked identity data to find victims and hold them hostage.

Let’s be clear: KYC doesn’t just put your data at risk. It puts people at risk.

Naturally, people are furious at any company that leaks their information.

But here’s the bigger issue:

No system is unhackable.

Major institutions, from the IRS to the State Department, have suffered breaches.

Protecting sensitive data at scale is nearly impossible.

And Coinbase didn’t want to collect this data.

Many companies don’t. It’s a massive liability.

They’re forced to, by law.

A new, dangerous normal

KYC, Know Your Customer, has become just another box to check.

Open a bank account? Upload your ID.

Use a crypto exchange? Add your selfie and utility bill.

Sign up for a payment app? Same thing.

But it wasn’t always this way.

Until the 1970s, you could walk into a bank with cash and open an account. Your financial life was private by default.

That changed with the Bank Secrecy Act of 1970, which required banks to start collecting and reporting customer activity to the government. Still, KYC wasn’t yet formalized. Each bank decided how well it needed to know someone. If you’d been a customer since childhood, or had a family member vouch for you, that was often enough.

Then came the USA PATRIOT Act of 2001, which turned KYC into law. It required every financial institution to collect, verify, and store identity documents from every customer, not just for large or suspicious transactions, but for basic access to the financial system.

From that point on, privacy wasn’t the default. It was erased.

The real-world cost

Today, everyone is surveilled all the time.

We’ve built an identity dragnet, and people are being hurt because of it.

Criminals use leaked KYC data to find and target people, and it’s not just millionaires. It’s regular people, and sometimes their parents, partners, or even children.

It’s happened in London, Buenos Aires, Dubai, Lagos, Los Angeles, all over the world.

Some are robbed. Some are held for ransom.

Some don’t survive.

- In Kyiv, a Moroccan man was kidnapped, forced to transfer $170,000 in crypto, and murdered.

- In Montreal, 25-year-old crypto influencer Kevin Mirshahi was kidnapped and killed. His body was found months later.

These aren’t edge cases. They’re the direct result of forcing companies to collect and store sensitive personal data.

When we force companies to hoard identity data, we guarantee it will eventually fall into the wrong hands.

"There are two types of companies: those that have been hacked, and those that don’t yet know they’ve been hacked." - John Chambers, former Cisco CEO

What KYC actually does

KYC turns every financial institution into a surveillance node.

It turns your personal information into a liability.

It doesn’t just increase risk. It creates it.

KYC is part of a global surveillance infrastructure. It feeds into databases governments share and query without your knowledge. It creates chokepoints where access to basic services depends on surrendering your privacy. And it deputizes companies to collect and hold sensitive data they never wanted.

If you’re trying to rob a vault, you go where the gold is.

If you’re trying to target people, you go where the data lives.

KYC creates those vaults: legally mandated, poorly secured, and irresistible to attackers.

Does it even work?

We’re told KYC is necessary to stop terrorism and money laundering.

But the top reasons banks file “suspicious activity reports” are banal, like someone withdrawing “too much” of their own money.

We’re told to accept this surveillance because it might stop a bad actor someday.

In practice, it does more to expose innocent people than to catch criminals.

KYC doesn’t prevent crime.

It creates the conditions for it.

A better path exists

We don’t have to live like this.

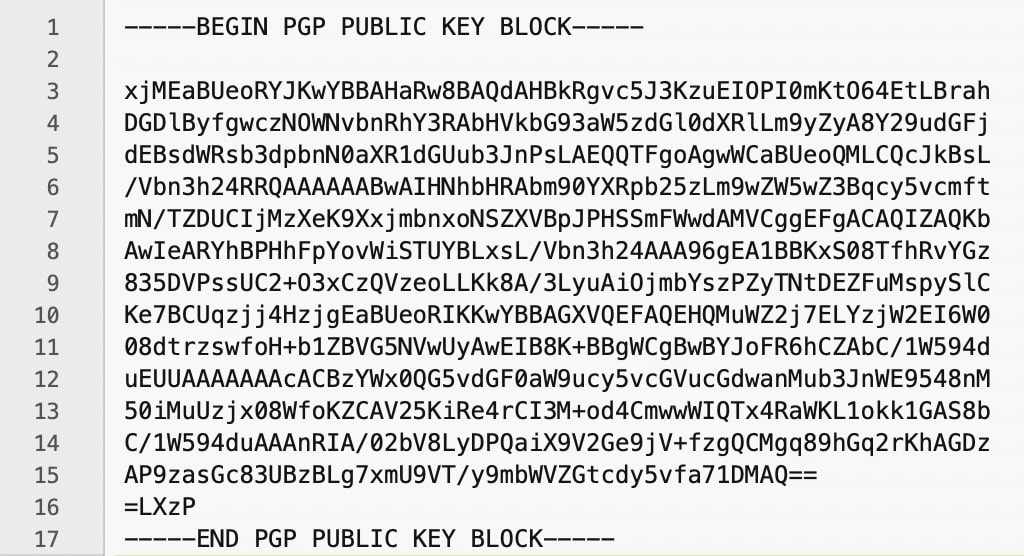

Better tools already exist, tools that allow verification without surveillance:

- Zero-Knowledge Proofs (ZKPs): Prove something (like your age or citizenship) without revealing documents

- Decentralized Identifiers (DIDs): You control what gets shared, and with whom

- Homomorphic Encryption: Lets platforms run checks on encrypted data without ever decrypting it
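To make the first idea less abstract, here is a minimal sketch of one of the simplest zero-knowledge building blocks: a Schnorr-style proof that you know a secret tied to a public value, without ever revealing the secret. It is not an age or citizenship proof, and it is not how any particular identity product works. The group parameters (`P`, `G`) and the function names are assumptions picked for readability, and they are far too weak for real use.

```python
import hashlib
import secrets

# Toy parameters (assumption: chosen for readability, NOT for security).
P = 2**127 - 1   # a Mersenne prime used as the modulus
ORDER = P - 1    # exponents are reduced modulo P - 1 (Fermat's little theorem)
G = 3            # fixed public base


def challenge(public_key: int, commitment: int) -> int:
    """Derive the challenge by hashing the public values (Fiat-Shamir)."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % ORDER


def prove(secret: int) -> tuple:
    """Return (public_key, commitment, response); the secret itself is never sent."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(ORDER - 1) + 1   # fresh random value for every proof
    commitment = pow(G, nonce, P)
    c = challenge(public_key, commitment)
    response = (nonce + c * secret) % ORDER
    return public_key, commitment, response


def verify(public_key: int, commitment: int, response: int) -> bool:
    """Check that G^response == commitment * public_key^c (mod P)."""
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


secret = secrets.randbelow(ORDER - 1) + 1
public_key, commitment, response = prove(secret)
print(verify(public_key, commitment, response))      # honest proof is accepted
print(verify(public_key, commitment, response + 1))  # tampered proof is rejected
```

The verifier only ever sees the public key, the commitment, and the response. Real systems build on the same pattern with vetted libraries and much stronger parameters, so a platform can check a claim without holding the document behind it.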

But maybe it’s time to question something deeper.

Why is centralized, government-mandated identity collection the foundation of participation in financial life?

This surveillance regime didn’t always exist. It was built.

And just because it’s now common doesn’t mean we should accept it.

We didn’t need it before. We don’t need it now.

It’s time to stop normalizing mass surveillance as a condition for basic financial access.

The system isn’t protecting us.

It’s putting us in danger.

It’s time to say what no one else will

KYC isn’t a necessary evil.

It’s the original sin of financial surveillance.

It’s not a flaw in the system.

It is the system.

And the system needs to go.

Takeaways

- Check https://HaveIBeenPwned.com to see which known breaches have already exposed your information

- Say no to services that hoard sensitive data

- Support better alternatives that treat privacy as a baseline, not an afterthought

Because safety doesn’t come from handing over more information.

It comes from building systems that never need it in the first place.

Yours in privacy,

Naomi