The healthcare sector is one of the biggest targets for cyberattacks, and the problem is only getting worse.

Every breach spills sensitive information—names, medical histories, insurance details, even Social Security numbers. But this time, it wasn’t hackers breaking down the doors.

It was Blue Shield of California leaving the front gate wide open.

Between April 2021 and January 2024, Blue Shield exposed the health data of 4.7 million members by misconfiguring Google Analytics on its websites. That’s right: protected health information was quietly piped to Google Ads, Google’s advertising platform, which may have used it to target ads back at those same members.

Let’s break down what was shared:

- Your insurance plan name and group number

- Your ZIP code, gender, and family size

- Patient names, financial responsibility, and medical claim service dates

- “Find a Doctor” searches—including provider names and types

- Internal Blue Shield account identifiers

They didn’t just leak names. They leaked context. The kind of data that paints a detailed picture of your life.

And what's worse—most people have become so numb to these data breaches that the most common response is “Why should I care?”

Here’s why you should.

1. Health data is deeply personal

This isn’t just a password or an email leak. This is your health. Your body. Your medical history. Maybe your therapist. Maybe a cancer screening. Maybe reproductive care.

This is the kind of stuff people don’t even tell their closest friends. Now imagine it flowing into a global ad system run by one of the biggest surveillance companies on earth.

Once shared, you don’t get to reel it back in. That vulnerability sticks.

2. Your family’s privacy is at risk, even if only your data was exposed

Health information doesn’t exist in a vacuum. A diagnosis on your record might reveal a hereditary condition your children could carry. A test result might imply something about your partner. An STD diagnosis might not be only your business.

This breach isn’t just about people directly listed on your health plan—it’s about your entire household being exposed by association. When sensitive medical data is shared without consent, it compromises more than your own privacy. It compromises your family’s.

3. Your insurance rates could be affected—without your knowledge

Health insurers already buy data from brokers to assess risk profiles. They don’t need your full medical chart to make decisions—they just need signals: a recent claim, a high-cost provider, a chronic condition inferred from your search history or purchases.

Leaks like this feed that ecosystem.

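Even if the data is incomplete or inaccurate, it can still be used to justify higher premiums or to deny you coverage entirely. The Affordable Care Act bars most US health plans from doing that based on your health, but life, disability, and long-term care insurers face no such restriction. And good luck challenging that decision. The burden of proof rarely falls on the companies profiling you. It falls on you.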

4. Leaked health data fuels exploitative advertising

When companies know which providers you’ve visited, which symptoms you searched for, or which procedures you recently underwent, advertisers get a disturbingly precise psychological profile of you.

This kind of data isn’t used to help you—it’s used to sell to you.

You might start seeing ads for drugs, miracle cures, or dubious treatments. You may be targeted with fear-based campaigns designed to exploit your pain, anxiety, or uncertainty. And it can all feel eerily personal—because it is.

This is surveillance at its most predatory. In recent years, the FTC has cracked down on companies like BetterHelp and GoodRx for sharing users’ health data with advertising platforms, including Facebook and Google, to power ad targeting.

This breach could be yet another example of a growing pattern: companies exploiting your data to target you.

5. It’s a goldmine for hackers running spear phishing campaigns

Hackers don’t need much to trick you into clicking a malicious link. But when they know:

- Your doctor’s name

- The date you received care

- How much you owed

- Your exact insurance plan and group number

…it becomes trivially easy to impersonate your provider or insurance company.

You get a message that looks official. It references a real event in your life. You click. You log in. You enter your bank info.

And your accounts are drained before you even realize what happened.

6. You can’t predict how this data will be used—and that’s the problem

We tend to underestimate the power of data until it’s too late. It feels abstract. It doesn’t hurt.

But data accumulates. It’s cross-referenced. Sold. Repackaged. Used in ways you’ll never be told—until you're denied a loan, nudged during an election, or flagged as a potential problem.

The point isn’t to predict every worst-case scenario. It’s that you shouldn’t have to. You should have the right to withhold your data in the first place.

Takeaways

The threat isn’t always a hacker in a hoodie. Sometimes it’s a quiet decision in a California boardroom that compromises millions of people at once.

We don’t get to choose when our data becomes dangerous. That choice is often made for us—by corporations we didn’t elect, using systems we can’t inspect, in a market that treats our lives as inventory.

But here’s what we can do:

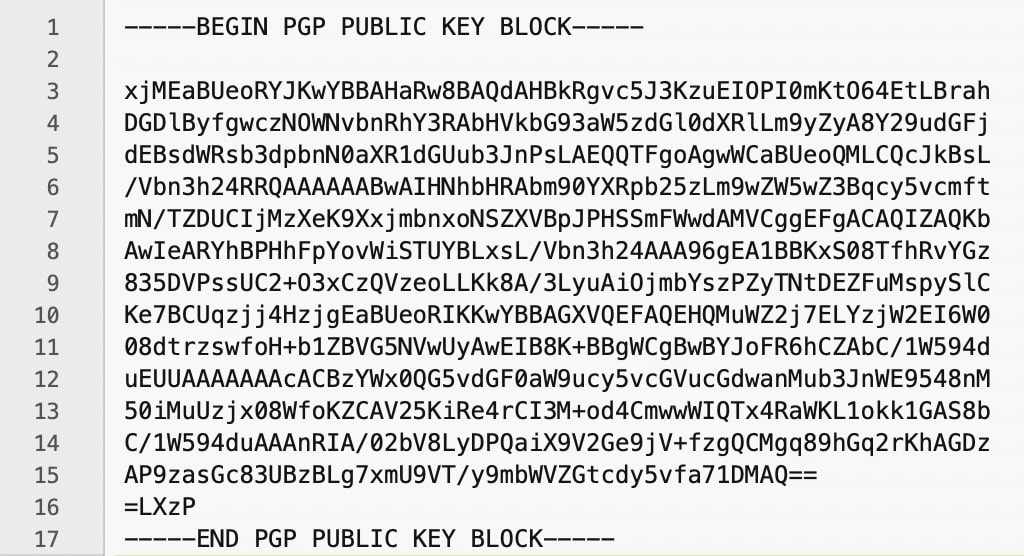

- Choose tools that don’t monetize your data. Every privacy-respecting service you use sends a signal.

- Push for legislation that treats data like what it is—power. Demand the right to say no.

- Educate others. Most people still don’t realize how broken the system is. Be the reason someone starts paying attention.

- Support organizations building a different future. Privacy won’t win by accident. It takes all of us.

Control over your data is control over your future—and while that control is slipping, we’re not powerless.

We can’t keep waiting for the next breach to “wake people up.” Let this be the one that turns the tide.

Privacy isn’t about secrecy. It’s about consent. And you never consented to this.

So yes, you should care. Because when your health data is treated as a business asset instead of a human right, no one is safe—unless we fight back.

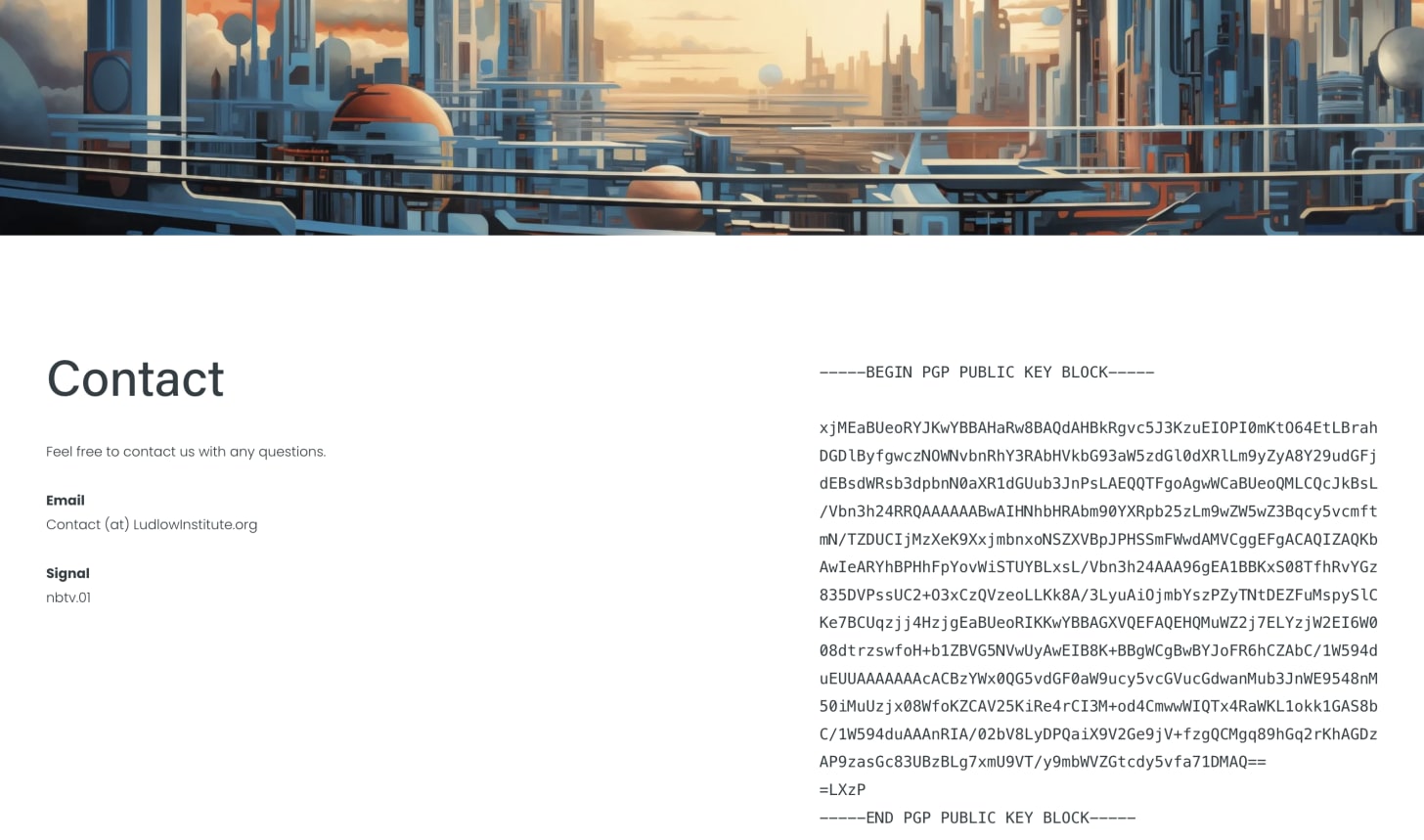

Yours in privacy,

Naomi