A member of our NBTV members’ chat recently shared something with us after a visit to her doctor.

She’d just gotten back from an appointment and felt really shaken up. Not because of a diagnosis, but because she realized just how little control she had over her personal information.

It started right at check-in, before she’d even seen the doctor.

Weight. Height. Blood pressure. Lifestyle habits. Do you drink alcohol? Are you depressed? Are you sexually active?

All the usual intake questions.

It all felt deeply personal, but this kind of data collection is normal now.

Yet she couldn’t help but wonder: shouldn't they ask why she’s there first? How can they know what information is actually relevant without knowing the reason for the visit? Why collect everything upfront, without context?

She answered every question anyway. Because pushing back makes people uncomfortable.

Once she was through with the medical assistant’s questions, she was taken in to see the doctor. That’s when she shared something personal, something she felt was important for the doctor to know, and made a simple request:

"Please don’t record that in my file."

The doctor responded:

"Well, this is something I need to know."

She replied:

"Yes, that’s why I told you. But I don’t want it written down. That file gets shared with who knows how many people."

The doctor paused, then said:

"I’m going to write it in anyway."

And just like that, her sensitive information, something she explicitly asked to keep off the record, became part of a permanent digital file.

That quiet moment said everything. Not just about one doctor, but about a system that no longer treats medical information as something you control. Because once something is entered into your electronic health record, it’s out of your hands.

You can’t delete it.

You can’t restrict who sees it.

She Said "Don’t Write That Down." The Doctor Did Anyway.

Financially incentivized to collect your data

The digital system that the medical assistant and doctor enter your information into is called an Electronic Health Record (EHR). EHRs aren’t just a digital version of your paper file. They’re part of a government-mandated system. Through federal legislation and financial incentives from HHS, clinics and hospitals were effectively required to digitize patient data.

On top of that, medical providers are required to demonstrate what’s called “Meaningful Use” of these EHR systems. Unless they can show meaningful use, providers don’t receive their Medicare and Medicaid incentive payments. So when you’re asked about your blood pressure, your weight, and your alcohol use, it’s part of a quota. There’s a financial incentive to collect your data, even when it isn’t directly related to your care. These incentives reward over-collection and over-documentation. There are no incentives for respecting your boundaries.

You’re not just talking to your doctor. You’re talking to the system

Most people have no idea how medical records actually work in the US. They assume that what they tell a doctor stays between the two of them.

That’s not how it works.

In the United States, HIPAA allows your personally identifiable medical data to be shared without your permission for a wide range of “health care operations” purposes.

Sounds innocuous enough. But the definition of health care operations is almost 400 words long. It’s essentially a list of about 65 non-clinical business activities that have nothing to do with your medical treatment whatsoever.

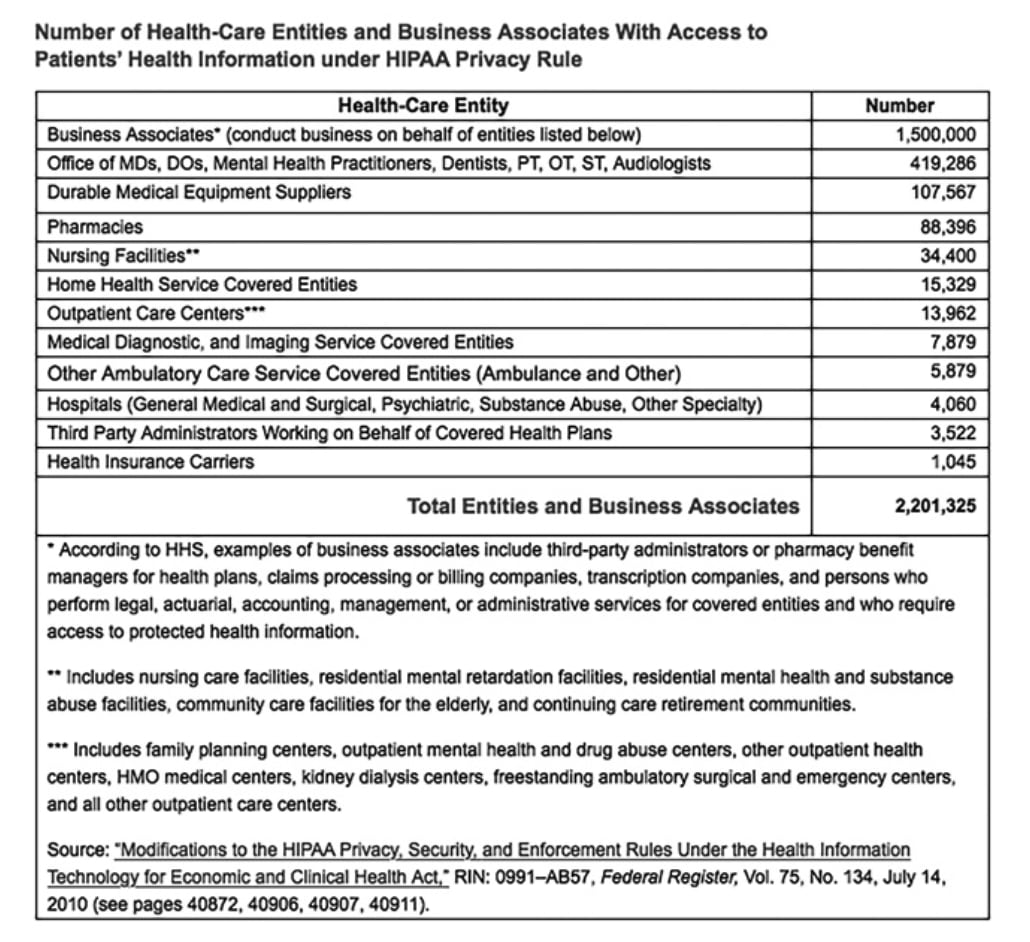

The recipients include not just hospitals, pharmacy systems, and insurance companies, but also billing contractors, analytics firms, and all kinds of third-party vendors. According to estimates in a 2010 Department of Health and Human Services (HHS) regulation, there are more than 2.2 million entities (covered entities and business associates) with which your personally identifiable, sensitive medical information can be shared, if those who hold it choose to share it. This number doesn’t even include government entities with access to your data, because they aren’t considered covered entities or business associates.

Your data doesn’t stay in the clinic. It gets passed upstream, without your knowledge and without needing your consent. No one needs to notify you when your data is shared. And you're not allowed to opt out. You can’t even get a list of everyone it’s been shared with. It’s just… out there.

The doctor may think they’re just "adding it to your chart". But what they’re actually doing is feeding a giant, invisible machine that exists far beyond that exam room.

We have an entire video diving into the details if you’re interested: You Have No Medical Privacy

Data breaches

Legal sharing isn’t the only risk of this accumulated data. What about data breaches? This part is almost worse.

Healthcare systems are among the top targets for ransomware attacks, because the data they hold is extremely valuable: full names, birth dates, Social Security numbers, medical histories, and billing information, all in one place.

It’s hard to find a major health system that hasn’t been breached. In fact, a 2023 report found that over 90% of healthcare organizations surveyed had experienced a data breach in the past three years.

That means if you’ve been to the doctor in the last few years, there’s a very real chance that some part of your medical file is already floating around, whether on the dark web, in a leaked ransomware dump, or in the hands of data brokers.

The consequences aren’t just theoretical. In one high-profile healthcare breach, people took their own lives after private details from their medical files were leaked online.

So when your doctor says, “This is just for your chart,” understand what that really means. You’re not just trusting your doctor. You’re trusting a system that has a track record of failing to protect you.

What happens when trust breaks

Once you become aware of how your data is being collected and shared, you see it everywhere. And in high-stakes moments, like a medical visit, pushing back is hard. You’re at your most vulnerable, and the power imbalance becomes really obvious.

So what do patients do when they feel that their trust has been violated? They start holding back. They say less. They censor themselves.

This is exactly the opposite of what should happen in a healthcare setting. Your relationship with your doctor is supposed to be built on trust. But when you tell your doctor something in confidence, and they say, “I’m going to log it anyway,” that trust is gone.

The problem here isn’t just one doctor. From their perspective, they’re doing what’s expected of them. The entire system is designed to prioritize documentation and compliance over patient privacy.

Privacy is about consent, not secrecy

But privacy matters. And not because you have something to hide. You might want your doctor to have full access to everything. That’s fine. But the point is, you should be the one making that call.

Right now, that choice is being stripped away by systems and policies that normalize forced disclosure.

We’re being told our preferences don’t matter. That our data isn’t worth protecting. And we’re being conditioned to stay quiet about it.

That has to change.

So what can you do?

First and foremost, if you’re in a high-stakes medical situation, focus on getting the care you need. Don’t let privacy concerns keep you from getting help.

But when you do have space to step back and ask questions, do it. That’s where change begins.

- Ask what data is necessary and why.

- Say no when something feels intrusive.

- Let your provider know that you care about how your data is handled.

- Support policy efforts that restore informed consent in healthcare.

- Share your story, because this isn’t just happening to one person.

The more people push back, the harder it becomes for the system to ignore us.

You should be able to go to the doctor and share what’s relevant, without wondering who’s going to have access to that information later.

The exam room should feel safe. Right now, it doesn’t.

Healthcare is in urgent need of a privacy overhaul. Let’s make that happen.

Yours In Privacy,

Naomi