Challenging the myth

"I have nothing to hide". It’s a phrase we’ve all heard, and perhaps even said ourselves, when privacy comes up. But it reveals a dangerous misunderstanding of what privacy is and why it matters.

Privacy isn’t about hiding—it’s about control. It’s about having the freedom to decide who gets access to your data and how it’s used. Over the last decade, that freedom has eroded. Today, governments, corporations, and hackers routinely collect and exploit our personal information, often without our consent.

Worse still, the narrative around privacy has shifted. Those who value it are seen as secretive, even criminal, while surveillance is sold to us as a tool for safety and transparency. This mindset benefits only those who profit from our data.

It’s time to push back. Here are 10 arguments you can use the next time someone says, "I have nothing to hide".

1. Privacy is about consent, not secrecy

Privacy isn’t about hiding secrets—it’s about having control over your information. It’s the ability to decide for yourself who gets access to your data.

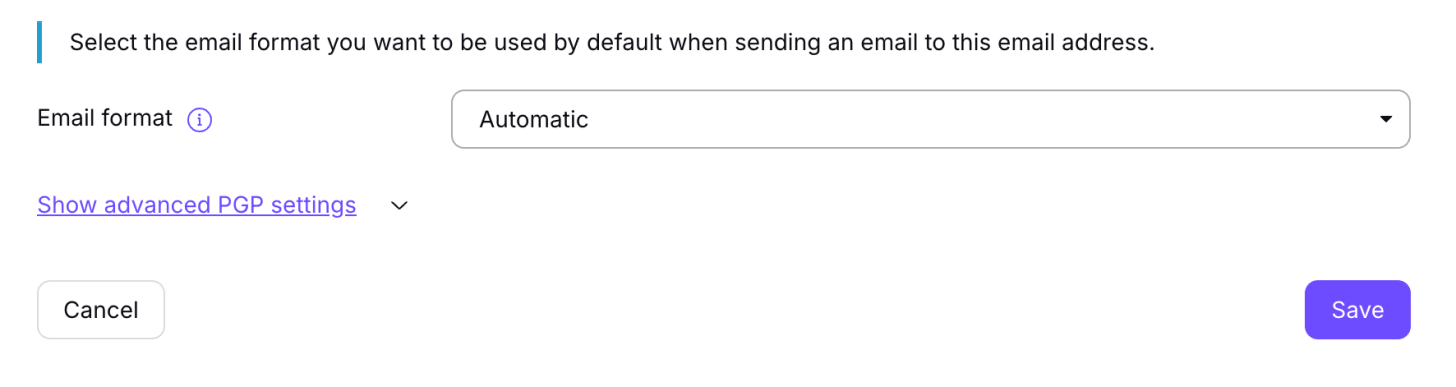

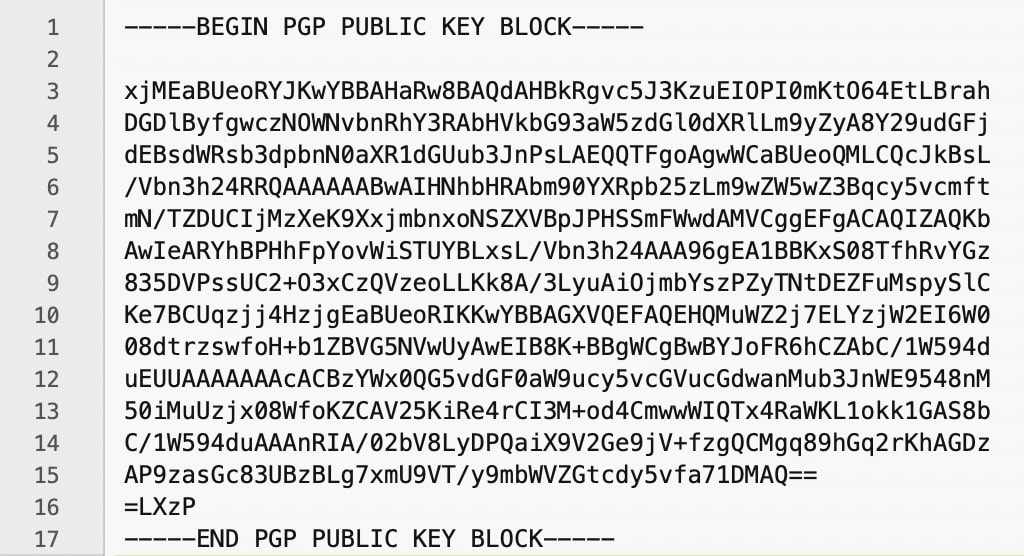

We don’t have to hand over all our personal information just because it’s requested. Tools like email aliases, VoIP numbers, and masked credit cards allow us to protect our data while still using online services. Privacy-focused services like ProtonMail or Signal respect this principle, giving us more control over our information.

2. Nothing to hide, everything to protect

Even if you think you have nothing to hide, you have everything to protect. Oversharing data makes you vulnerable to hackers, scammers, and other malicious actors.

For example:

- Hackers can use personal details like your home address or purchase history to commit fraud or even locate you.

- Data brokers and the firms that buy from them can use your data to target you with content designed to shape your opinions, including your political beliefs, as seen in the Cambridge Analytica scandal.

Protecting your data is about safeguarding yourself from these threats and protecting your autonomy.

3. Your data is forever

Data collected about you today will still exist decades from now. Governments change, laws evolve, and what’s harmless now could be used against you or your children in the future.

Surveillance infrastructure rarely disappears once it’s built. Limiting the data collected about you now is essential for protecting yourself from unknown risks down the line.

4. It’s not about you

Privacy isn’t just a personal issue—it’s about protecting others. Activists, journalists, and whistleblowers rely on privacy to do their work safely. By dismissing privacy, you’re ignoring the people for whom it’s a matter of life and death.

For example, Pegasus spyware has been used to track and silence journalists and activists. Whether or not we personally feel we need privacy, we should be leaning into privacy tools, supporting the privacy ecosystem, and making sure the people who help keep our society free and safe are protected.

5. Surveillance isn’t about criminals

The claim that surveillance is "only for catching bad guys" is a myth. Once surveillance tools are deployed, they almost always expand beyond their original purpose.

History has shown how governments use surveillance to target dissenters, minorities, and anyone challenging the status quo. Privacy isn’t just for criminals—it’s a safeguard against abuse of power.

6. Your choices put others at risk

When you disregard privacy, you expose not just yourself but also the people around you.

For example:

- Using apps that access your contact list can leak your friends’ and family’s phone numbers and addresses without their consent.

- Insisting on non-private communication tools can expose sensitive conversations to surveillance or data breaches.

- Uploading your photos to a non-private cloud like Google Drive can allow the people in them to be identified using facial recognition and profiled based on what Google’s AI detects in the images.

Respecting privacy isn’t just about protecting yourself—it’s about respecting the privacy boundaries of others.

7. Privacy is not dead

For some people, "I have nothing to hide" is a coping mechanism.

"Privacy is dead, so why bother?"

This defeatist attitude is both false and harmful. Privacy is alive—it’s a choice we can make every day. Let’s stop disempowering others by convincing them they shouldn’t even try.

There are countless privacy tools you can incorporate into your life. By choosing these tools, you take back control over your information and send a clear message that privacy matters.

8. Your data can be weaponized

All it takes is one bad actor—a rogue employee, an ex-partner, or a hacker—to turn your data against you. From revenge hacking to identity theft, the consequences of oversharing are real and dangerous.

Limiting the amount of data collected about you reduces your vulnerability and makes it harder for others to exploit your information.

9. Surveillance stifles creativity and dissent

Surveillance doesn’t just invade your privacy—it affects how you think and behave. Studies show that people censor themselves when they know they’re being watched.

This "chilling effect" stifles creativity, innovation, and dissent. Without privacy, we lose the ability to think freely, explore controversial ideas, and push back against authority.

10. Your choices send a signal

Every decision you make about technology sends a message. Choosing privacy-focused companies tells the market, "This matters". It encourages innovation and creates demand for tools that protect individual freedom.

Conversely, supporting data-harvesting companies reinforces the status quo and pushes privacy-focused alternatives out of the market. Saying "I have nothing to hide" instead of leaning into the privacy tools around us ignores the role we all play in shaping the future of society.

Takeaways: Why privacy matters

- Privacy is about consent, not secrecy. It’s your right to control who accesses your data.

- You have everything to protect. Data breaches and scams are real threats.

- Data is forever. What’s collected today could harm you tomorrow.

- Privacy protects others. Journalists and activists depend on it to do their work safely.

- Surveillance tools expand. They rarely stop at targeting criminals.

- Your choices matter. Privacy tools send a message to the market and inspire change.

- Privacy isn’t dead. We have tools to protect ourselves—it’s up to us to use them.

A fight we can’t afford to lose

Privacy isn’t about hiding—it’s about protecting your rights, your choices, and your future. Surveillance is a weapon that can silence opposition, suppress individuality, and enforce conformity. Without privacy, we lose the freedom to dissent, innovate, and live without fear.

The next time someone says, "I have nothing to hide", remind them: privacy is normal. It’s necessary. And it’s a fight we can’t afford to lose.

Yours in privacy,

Naomi