The other day I attended a conference hosted by an international software company. The presenters came from various countries, all speaking in English. English is the corporate language. But none of them were native English speakers, which was obvious. It made me think about how strange it is when people spend most of their time communicating in a language they don't fully know and can never really master. All the nuances are lost, all the linguistic creativity, the ambiguity, the unspoken words, the hidden sarcasm, and the secret humour.

Shortly after, I was coaching one of my students, a German software specialist who speaks quite decent English, but still, we spent a lot of time figuring out exactly what he meant by a particular paragraph. I asked him if he couldn't just tell me in German. "Do you speak German?" he asked. "Well, I learned it in high school," I replied, "but it might be a stretch to say that means I know German." Then we just laughed. Eventually, we managed to resolve the issue, in English, which neither of us speaks perfectly. Did we understand the paragraph in the same way? Surely not.

Being able to speak any language, but understand none

But now we have artificial intelligence. And whether we like it or not, AI is considerably better at English than almost every non-native speaker, and indeed than a large share of native speakers as well. The style is admittedly flat, but the same applies when people express themselves in a language they haven't fully mastered. Soon, we can expect the technology to reach the point where, in phone calls and remote meetings, we can simply speak our own mother tongue and let artificial intelligence instantly translate the content into any other language.

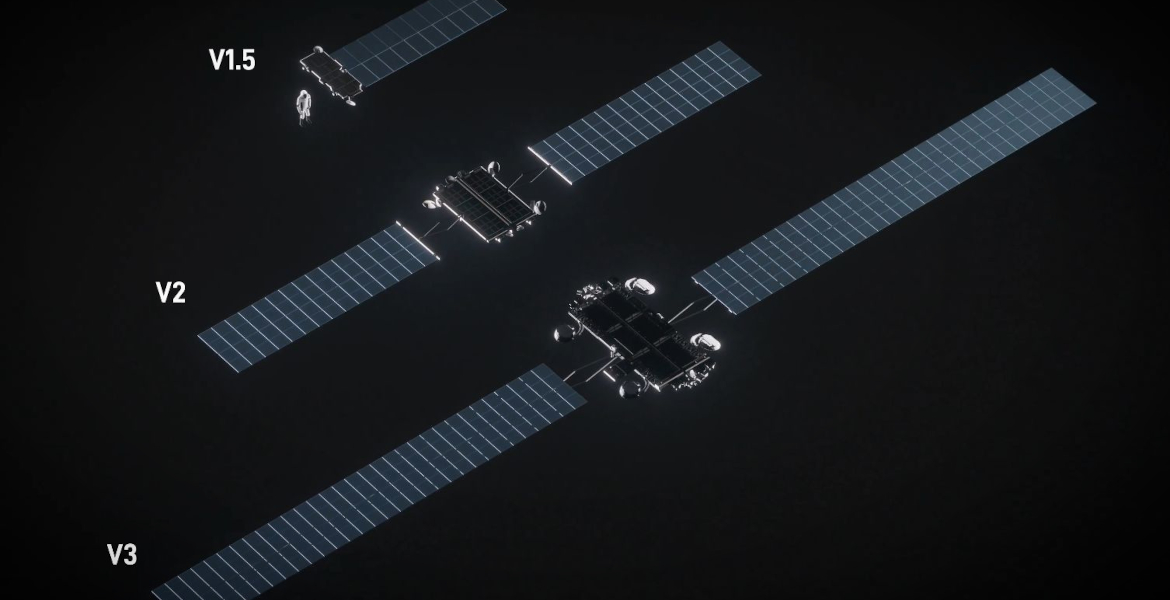

The Swede working at the international software company then simply speaks Swedish to the Ukrainian or the Frenchman, who hear the Ukrainian or French version and respond in their own language. And when Elon Musk and the others now working hard to develop brain chips have progressed further, it might even be enough to press a button on the brain remote control we will soon all carry, to switch languages and speak French, Ukrainian, Swahili, or Hindi as needed. But of course, without understanding a word of what comes out of our mouths.