This week, a devastating breach exposed tens of thousands of users of Tea, a dating safety app that asked women to verify their identity with selfies, government IDs, and location data.

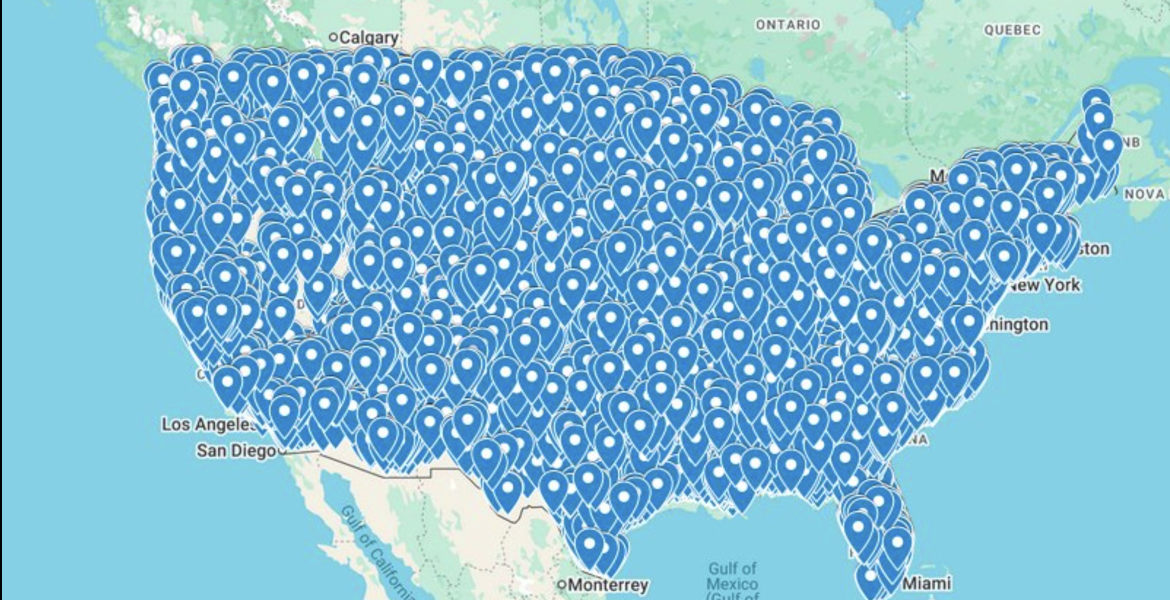

Over 72,000 images were found in a publicly accessible Firebase storage bucket. No authentication required. 4chan users discovered the open bucket and immediately began downloading and sharing the contents: face scans, driver’s licenses, and private messages. Some users have already used the leaked IP addresses to build and circulate maps that attempt to track and trace the women in those files.

Tea confirmed the breach, claiming the data came from a legacy system. But that doesn’t change the core issue:

This data never should have been collected in the first place.

What’s marketed as safety often doubles as surveillance

Tea is just one example of a broader trend: platforms claiming to protect you while quietly collecting as much data as possible. “Verification” is marketed as a security feature, something you do for your own good. The app was pitched as a tool to help women vet potential dates, avoid abuse, and stay safe. But in practice, access required handing over deeply personal data. Face scans, government-issued IDs, and real-time location information became the price of entry.

This is how surveillance becomes palatable. The language of “just for verification” hides the reality. Users are given no transparency about where their data is stored, how long it is kept, or who can access it. These aren’t neutral design choices. They are calculated decisions that prioritize corporate protection, not user safety.

We need to talk about KYC

What happened with Tea reflects a much bigger issue. Identification is quietly becoming the default requirement for access to the internet. No ID? No entry. No selfie? No account. This is how KYC culture has expanded, moving far beyond finance into social platforms, community forums, and dating apps.

We’ve been taught to believe that identity verification equals safety. But time and again, that promise falls apart. Centralized databases get breached, IP addresses are logged and weaponized, and photos meant for internal review end up archived on the dark web.

If we want a safer internet, we need to stop equating surveillance with security. The real path to safety is minimizing what gets collected in the first place. That means embracing pseudonyms, decentralizing data, and building systems that do not rely on a single gatekeeper to decide who gets to participate.

“Your data will be deleted”. Yeah right.

Tea’s privacy policy stated in black and white:

Selfies and government ID images “will be deleted immediately following the completion of the verification process”.

Yet here we are. Over 72,000 images are now circulating online, scraped from an open Firebase bucket. That’s a direct contradiction of what users were told. And it’s not an isolated incident.

This kind of betrayal is becoming disturbingly common. Companies collect high-risk personal data and reassure users with vague promises:

“We only keep it temporarily”.

“We delete it right after verification”.

“It’s stored securely”.

These phrases are repeated often to make us feel better about handing over our most private information. But there’s rarely any oversight, and almost never any enforcement.

At TSA checkpoints in the U.S., travelers are now being asked to scan their faces. The official line? The images are immediately deleted. But again, how do we know? Who verifies that? The public isn’t given access to the systems handling those scans. There’s no independent audit and no transparency. We’re simply asked to trust blindly.

The truth is, we usually don’t know where our data goes. “Just for verification” has become an excuse for massive data collection. And even if a company intends to delete your data, it still exists long enough to be copied, leaked, or stolen.

Temporary storage is still storage.

This breach shows how fragile those assurances really are. Tea said the right things on paper, but in practice, its database was completely unprotected. That’s the reality behind most “privacy policies”: vague promises, no independent oversight, and no consequences when those promises are broken.

KYC pipelines are a perfect storm of risk. They collect extremely sensitive data. They normalize giving it away. And they operate behind a curtain of unverifiable claims.

It’s time to stop accepting “don’t worry, it’s deleted” as a substitute for actual security. If your platform requires storing sensitive personal data, that data becomes a liability the moment it is collected.

The safest database is the one that never existed.

A delicate cultural moment

This story has touched a nerve. Tea was already controversial, with critics arguing it enabled anonymous accusations and blurred the line between caution and public shaming. Some see the breach as ironic, even deserved.

But that is not the lesson we should take from this.

The breach revealed how normalized identity exposure has become, and how quickly sensitive data turns into ammunition once it slips out of the hands of those who collected it.

It’s a reminder that we are all vulnerable in a world that demands ID verification just to participate in daily life.

This isn’t just about one app’s failure. It’s a reflection of the dangerous norms we’ve accepted.

Takeaways

- KYC is a liability, not a security measure. The more personal data a platform holds, the more dangerous a breach becomes.

- Normalizing ID collection puts people at risk. The existence of a database is always a risk, no matter how noble the intent.

- We can support victims of surveillance without endorsing every platform they use. Privacy isn’t conditional on whether we like someone or not.

- It’s time to build tools that don’t require identity. True safety comes from architectures that protect by design.

Let this be a wake-up call. Not just for the companies building these tools, but for all of us using them. Think twice before handing over your ID or revealing your IP address to any platform.

Yours in privacy,

Naomi