An American start-up has successfully tested a device that can purify water contaminated with persistent PFAS compounds, according to a report in GeekWire.

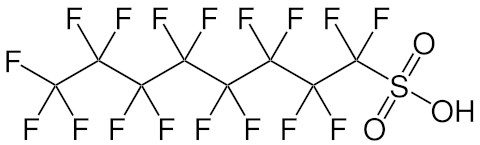

PFAS are synthetically produced substances found in everything from food packaging to hygiene products; some of these substances can be harmful to both health and the environment. Because PFAS compounds are so difficult to break down, they are sometimes referred to as "forever chemicals". These substances can leak from a range of products and contaminate, among other things, drinking water. They have also been detected in breast milk, and it has previously been challenging to find effective methods to remove them.

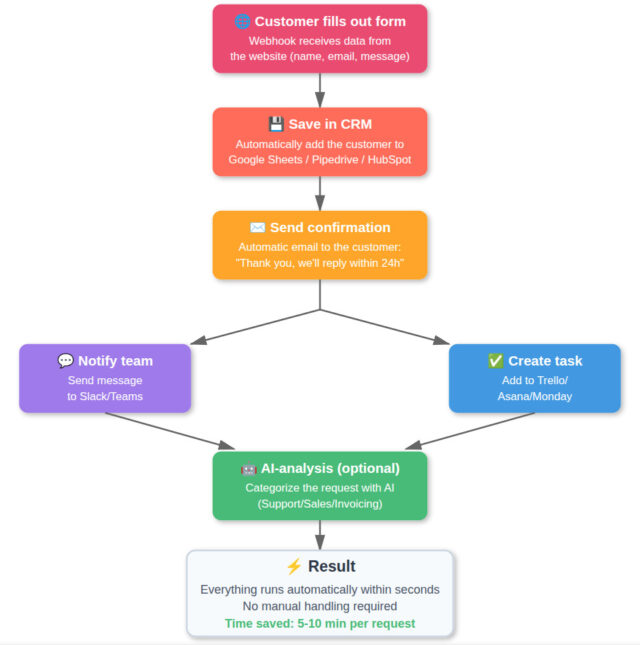

The American start-up Aquagga has now developed a PFAS destruction unit, affectionately named "Eleanor", which was recently tested at Fairbanks Airport in Alaska. There, the unit treated tens of thousands of liters of wastewater that had been contaminated with PFAS for 40 years.

– PFAS is incredibly tough to break down and deal with, Nigel Sharp, the company's CEO, told GeekWire. So we’re very fortunate we’ve validated the technology works, and we’re now to the point of [going to] commercial scale and growth of the company.

The PFAS destruction unit incorporates technology developed at the University of Washington and the Colorado School of Mines. The device uses very high pressure and temperatures of up to about 300 degrees Celsius. Lye, an ingredient used in soap making, is then added to create a corrosive environment. This is intended to break down each PFAS molecule by breaking the bond at its head, chopping up its backbone of carbon atoms, and cutting off the fluorine atoms that run along the spine.

The free fluoride is then combined with calcium or sodium to form less harmful compounds, similar to the fluoride used in many toothpastes.

– Testing shows that more than 99% of the PFAS are destroyed in treated water, says Sharp.

Elise Thomas, head of the environmental program at Fairbanks Airport, is pleased with the results and believes the system will attract more facilities struggling with PFAS contamination.

– It gives us hope, says Thomas – and gives us something to look forward to.

PFAS, or per- and polyfluoroalkyl substances, are synthetic chemicals found in products like food packaging and non-stick cookware. Often called "forever chemicals" because they resist breakdown, they can contaminate drinking water and soil, and have even been found in human tissue. Their presence raises health concerns, including hormonal disruption and an increased cancer risk. Eliminating or neutralizing them in contaminated areas has long been a challenge.