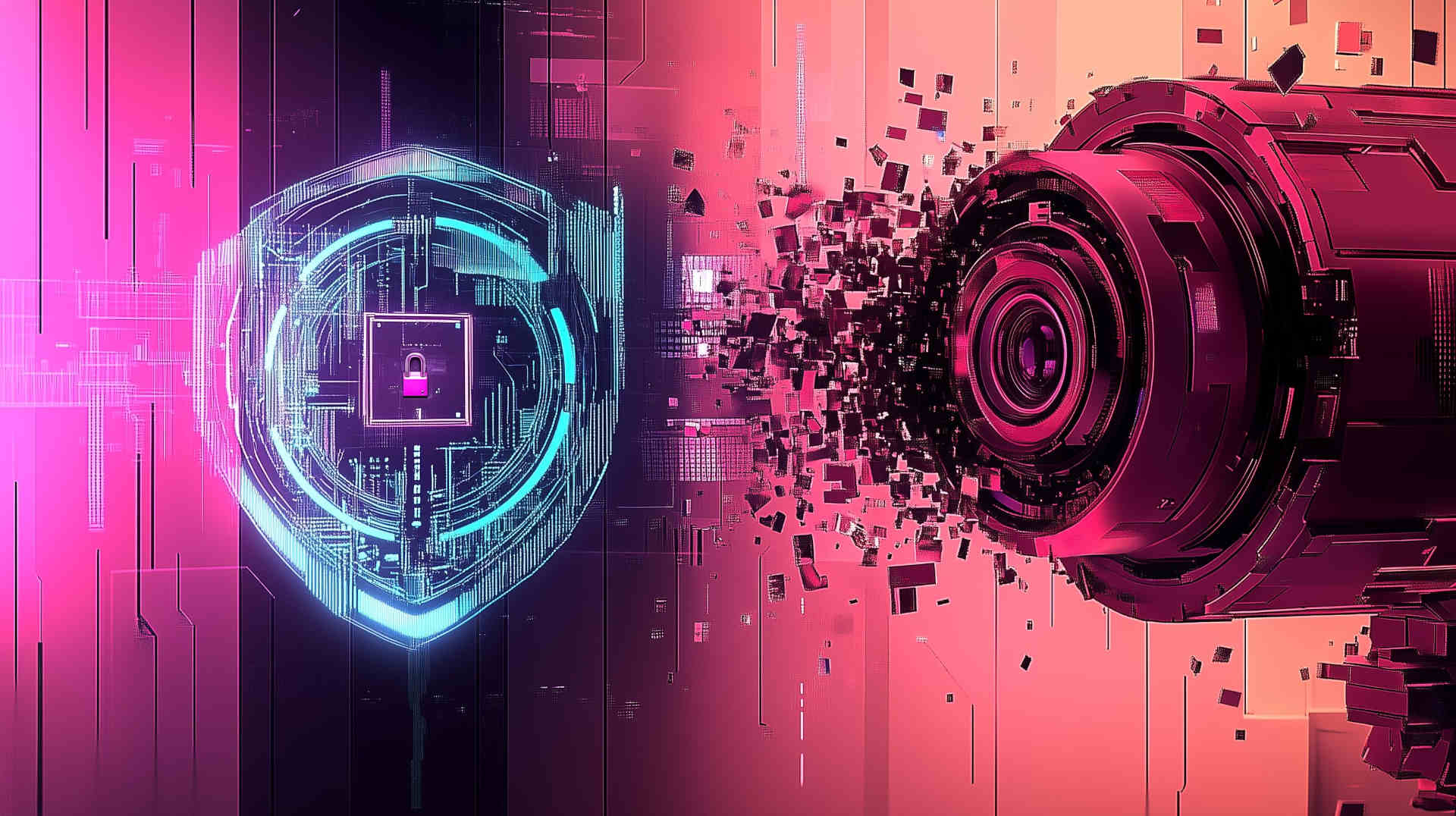

Let’s be honest: data is useful. But we’re constantly told that in order to benefit from modern tech—and the insights that come with it—we have to give up our privacy. That useful data only comes from total access. That once your info is out there, you’ve lost control. That there’s no point in trying to protect it anymore.

These are myths. And they’re holding us back.

The truth is, you can benefit from data-driven tools without giving away everything. You can choose which companies to trust. You can protect one piece of information while sharing another. You can demand smarter systems that deliver insights without exploiting your identity.

Privacy isn’t about opting out of technology—it’s about choosing how you engage with it.

In this issue, we’re busting four of the most common myths about data collection. Because once you understand what’s possible, you’ll see how much power you still have.

Myth #1: "I gave data to one company, so my privacy is already gone."

This one is everywhere. Once people sign up for a social media account or share info with a fitness app, they often throw up their hands and say, "Well, I guess my privacy’s already gone."

But that’s not how privacy works.

Privacy is about choice. It’s about context. It’s about setting boundaries that make sense for you.

Just because you’ve shared data with one company doesn’t mean you’re giving blanket permission to every app, government agency, or ad network to track you forever.

You’re allowed to:

- Share one piece of information and protect another.

- Say yes to one service and no to others.

- Change your mind, rotate your identifiers, and reduce future exposure.

Privacy isn’t all or nothing. And it’s never too late to take some power back.

Myth #2: "If I give a company data, they can do whatever they want with it."

Not if you pick the right company.

Many businesses are committed to ethical data practices. Some explicitly state in their terms that they’ll never share your data, sell it, or use it outside the scope of the service you signed up for.

Look for platforms that don’t retain unnecessary data. There are more of them out there than you think.

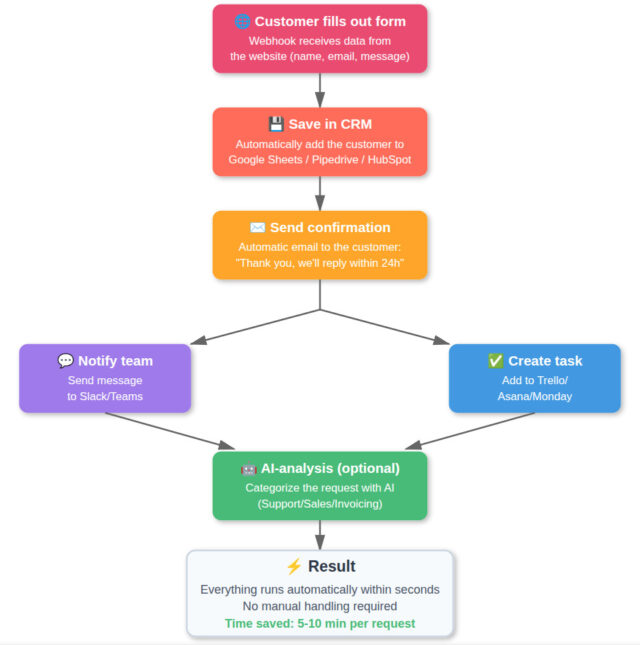

Myth #3: "To get insights, a company needs to see my data."

This one’s finally starting to crumble—thanks to game-changing tech like homomorphic encryption.

Yes, really: companies can now run computations on encrypted data without ever decrypting it.

It’s already in use in financial services, research, and increasingly, consumer apps. It proves that privacy and data analysis can go hand in hand.

Imagine this: a health app computes your sleep averages, detects issues, and offers recommendations—without ever seeing your raw data. It stays encrypted the whole time.
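To make that concrete, here is a minimal sketch of the idea using a toy version of the Paillier cryptosystem, an additively homomorphic scheme. Everything in it is an assumption for illustration: the tiny primes, the function names, and the sample sleep data are made up, and a real app would use a vetted cryptography library with much larger keys. The point is only to show that a server can add up numbers it cannot read.

```python
import math
import secrets

# Toy key material. These primes are far too small for real use.
P, Q = 1009, 1013
N = P * Q                  # public key
N_SQ = N * N
PHI = (P - 1) * (Q - 1)    # private key (stays on your device)
MU = pow(PHI, -1, N)       # private helper: inverse of PHI mod N

def encrypt(m: int) -> int:
    """Encrypt m (0 <= m < N) with the public key N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ

def decrypt(c: int) -> int:
    """Decrypt with the private values PHI and MU."""
    x = pow(c, PHI, N_SQ)
    return ((x - 1) // N * MU) % N

def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the hidden plaintexts."""
    return (c1 * c2) % N_SQ

# On your device: encrypt a week of sleep durations (hypothetical data).
nightly_minutes = [420, 390, 455, 480, 410, 365, 440]
ciphertexts = [encrypt(m) for m in nightly_minutes]

# On the server: combine ciphertexts without ever holding the private key.
encrypted_total = ciphertexts[0]
for c in ciphertexts[1:]:
    encrypted_total = add_encrypted(encrypted_total, c)

# Back on your device: decrypt the total and finish the average locally.
total = decrypt(encrypted_total)
average = total / len(nightly_minutes)
print(f"Average sleep: {average:.1f} minutes")
```

In this sketch the server only ever sees ciphertexts. Paillier supports addition but not division, so the last step of the average happens on your device after decryption. Fully homomorphic schemes support richer computations at a higher performance cost.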

We need to champion this kind of innovation. More research. More tools. More adoption. And more support for companies already doing it. When we give them our business, we signal that the investment was worth it, and that encourages other companies to jump on board.

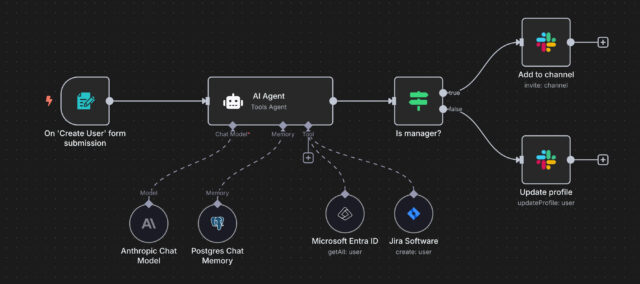

Myth #4: "To prove who you are, you have to hand over sensitive data."

You’ve heard this from banks, employers, and government forms: "We need your full ID to verify who you are."

But here’s the problem: every time we hand over sensitive data, we increase our exposure to breaches and identity theft. It’s a bad system.

There’s a better way.

With zero-knowledge proofs, we can prove things like being over 18, or matching a record—without revealing our address, birthdate, or ID number.
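For a feel of how this works, here is a minimal sketch of one of the simplest zero-knowledge proofs: a Schnorr proof, made non-interactive with a hash. It proves "I know the secret behind this public key" without revealing the secret. That is a simpler statement than "I am over 18", which in practice needs more machinery such as range proofs or signed credentials, but the principle is the same. The group parameters here are toy-sized and the function names are made up for illustration.

```python
import hashlib
import secrets

# Toy group: P = 2*Q + 1 with both prime, and G generates the subgroup
# of order Q. Real systems use much larger, standardized parameters.
P, Q, G = 2039, 1019, 4

def challenge(public_key: int, commitment: int) -> int:
    """Derive the challenge by hashing the public values."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q

def prove(secret: int) -> tuple[int, int]:
    """Produce a proof of knowing `secret` without disclosing it."""
    public_key = pow(G, secret, P)
    k = secrets.randbelow(Q - 1) + 1       # one-time random nonce
    commitment = pow(G, k, P)
    c = challenge(public_key, commitment)
    response = (k + c * secret) % Q
    return commitment, response

def verify(public_key: int, proof: tuple[int, int]) -> bool:
    """Check the proof using only public information."""
    commitment, response = proof
    c = challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P

# You hold the secret; the verifier only ever sees the public key and proof.
secret = secrets.randbelow(Q - 1) + 1
public_key = pow(G, secret, P)
proof = prove(secret)
print("Proof accepted:", verify(public_key, proof))
```

The verifier learns that the prover knows the secret and nothing else about it. A tampered proof, for example one with the response changed by one, fails the check.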

The tech already exists. But companies and institutions are slow to adopt it or even recognize it as legitimate. This won’t change until we demand better.

Let’s push for a world where:

- Our identity isn’t a honeypot for hackers.

- We can verify ourselves without becoming vulnerable.

- Privacy-first systems are the norm—not the exception.

Takeaways

The idea that we have to trade privacy for progress is a myth. You can have both. The tools exist. The choice is ours.

Privacy isn’t about hiding—it’s about control. You can choose to share specific data without giving up your rights or exposing everything.

Keep these in mind:

- Pick tools that respect you. Look for platforms with strong privacy practices and transparent terms.

- Use privacy-preserving tech. Homomorphic encryption and zero-knowledge proofs are real—and growing.

- Don’t give up just because you shared once. Privacy is a spectrum. You can always take back control.

- Talk about it. The more people realize they have options, the faster we change the norm.

Being informed doesn’t have to mean being exploited.

Let’s demand better.

Yours in privacy,

Naomi