DEFCON is the world’s largest hacker conference. Every year, tens of thousands of people gather in Las Vegas to share research, run workshops, compete in capture-the-flag tournaments, and break things for sport. It’s a subculture. A testing ground. A place where some of the best minds in security and privacy come together not just to learn, but to uncover what’s being hidden from the rest of us. It’s where curiosity runs wild.

But to really get DEFCON, you have to understand the people.

What is a hacker?

I love hacker conferences because of the people. Hackers are notoriously seen as dangerous. The stereotype is that they wear black hoodies and Guy Fawkes masks.

But that’s not why they’re dangerous: They’re dangerous because they ask questions and have relentless curiosity.

Hackers have a deep-seated drive to learn how things work, not just at the surface, but down to their core.

They aren’t content with simply using tech. They want to open it up, examine it, and see the hidden gears turning underneath.

A hacker sees a device and doesn’t just ask, “What does it do?”

They ask, “What else could it do?”

“What isn’t it telling me?”

“What’s under the hood, and why does no one want me to look?”

They’re curious enough to pull back curtains others want to remain closed.

They reject blind compliance and test boundaries.

When society says “Do this,” hackers ask “Why?”

They don’t need a rulebook or external approval.

They trust their own instincts and intelligence.

They’re guided by internal principles, not external prescriptions.

They’re not satisfied with the official version. They challenge it.

Because of this, hackers are often at the fringes of society. They’re comfortable with being misunderstood or even vilified. Hackers are unafraid to reveal truths that powerful entities want buried.

But that position outside the mainstream gives them perspective: They see what others miss.

Today, the word “hack” is everywhere:

Hack your productivity.

Hack your workout.

Hack your life.

What it really means is:

Don’t accept the defaults.

Look under the surface.

Find a better way.

That’s what makes hacker culture powerful.

It produces people who will open the box even when they’re told not to.

People who don’t wait for permission to investigate how the tools we use every day are compromising us.

That insistence on curiosity, noncompliance, and pushing past the surface to see what’s buried underneath is exactly what we need in a world built on hidden systems of control.

We should all aspire to be hackers, especially when it comes to confronting power and surveillance.

Everything is computer

Basically every part of our lives runs on computers now.

Your phone. Your car. Your thermostat. Your TV. Your kid’s toys.

And much of this tech has been quietly and invisibly hijacked for surveillance.

Companies and governments both want your data. And neither wants you asking how these data collection systems work.

We’re inside a deeply connected world, built on an opaque infrastructure that is extracting behavioral data at scale.

You have a right to know what’s happening inside the tech you use every day.

Peeking behind the curtain is not a crime. It’s a public service.

In today’s world, the hacker mindset is not just useful. It’s necessary.

Hacker culture in a surveillance world

People who ask questions are a nightmare for those who want to keep you in the dark.

They know how to dig.

They don’t take surveillance claims at face value.

They know how to verify what data is actually being collected.

They don’t trust boilerplate privacy policies or vague legalese.

They reverse-engineer SDKs.

They monitor network traffic.

They intercept outgoing requests and inspect payloads.

And they don’t ask for permission.

That’s what makes hacker culture so important. If we want any hope of reclaiming privacy, we need people with the skills and the willingness to pull apart the systems we’re told not to question.
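If “inspect payloads” sounds abstract, here is a minimal sketch of what that step can look like once a request body has already been captured. Everything in it is an assumption made for illustration: the patterns, the function name `find_identifiers`, and the sample payload are hypothetical, and real apps often encode or encrypt what they send, so treat this as a starting point rather than a complete method.

```python
import json
import re

# Hypothetical patterns for values that tend to identify a person or device.
# These are illustrative assumptions, not an exhaustive or authoritative list.
PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "uuid": re.compile(r"^[0-9a-fA-F]{8}-(?:[0-9a-fA-F]{4}-){3}[0-9a-fA-F]{12}$"),
    "coordinates": re.compile(r"^-?\d{1,3}\.\d{3,},\s*-?\d{1,3}\.\d{3,}$"),
}


def find_identifiers(payload, path=""):
    """Walk a decoded JSON payload and return (path, kind) for suspicious strings."""
    findings = []
    if isinstance(payload, dict):
        for key, value in payload.items():
            child = f"{path}.{key}" if path else key
            findings.extend(find_identifiers(value, child))
    elif isinstance(payload, list):
        for index, value in enumerate(payload):
            findings.extend(find_identifiers(value, f"{path}[{index}]"))
    elif isinstance(payload, str):
        for kind, pattern in PATTERNS.items():
            if pattern.match(payload):
                findings.append((path, kind))
    return findings


# A made-up request body, standing in for one captured with an intercepting proxy.
captured_body = json.dumps({
    "event": "app_open",
    "user": {"contact": "jane@example.com"},
    "device": {"ad_id": "123e4567-e89b-12d3-a456-426614174000"},
    "tags": ["beta", "40.712800,-74.006000"],
})

for field_path, kind in find_identifiers(json.loads(captured_body)):
    print(f"{field_path}: looks like {kind}")
```

The point is not the specific patterns. It’s that once you can see the payload, you can check what it contains for yourself instead of relying on what the privacy policy says.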

On top of that, governments and corporations both routinely use outdated and overbroad legislation like the Computer Fraud and Abuse Act (CFAA) to go after public-interest researchers who investigate tech. Not because those researchers cause harm, but because they reveal things that others want kept hidden.

Laws like this pressure people towards compliance, and make them afraid to ask questions. The result is that curiosity feels like a liability, and it becomes harder for the average person to understand how the digital systems around us actually work.

That’s why the hacker mindset matters so much: Because no matter how hard the system pushes back, hackers keep asking questions.

The researchers I met at DEFCON

This year at DEFCON, I met researchers who are doing exactly that.

People uncovering surveillance code embedded in children’s toys.

People doing analysis on facial recognition SDKs.

People testing whether your photo is really deleted after “verification”.

People capturing packets and discovering that the “local only” systems you’re using aren’t local at all, and are sending your data to third parties.

People analyzing “ephemeral” IDs, and finding that your data was being stored and linked back to real identities.

You’ll be hearing from some of them on our channel in the coming months.

Their work is extraordinary, and it is helping all of us move towards a world of informed consent instead of blind compliance. Without this kind of research, the average person has no way to know what’s happening behind the scenes. We can’t make good decisions about the tech we use if we don’t know what it’s doing.
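To give a feel for how a “local only” claim can be checked, here is a small sketch that takes destination IP addresses seen in a packet capture and lists the ones that leave the local network. The function names and the list of observed addresses are made up for illustration, and this is not the method any of these researchers used. It relies only on Python’s standard `ipaddress` module, and it ignores edge cases such as multicast traffic.

```python
import ipaddress


def is_local_destination(address):
    """Return True if the address is loopback, link-local, or in a private range."""
    ip = ipaddress.ip_address(address)
    return ip.is_loopback or ip.is_link_local or ip.is_private


def non_local_destinations(destinations):
    """Return the sorted, de-duplicated destinations that leave the local network."""
    return sorted({d for d in destinations if not is_local_destination(d)})


# Made-up destination addresses, standing in for what a packet capture
# of a "local only" device might show.
observed = ["192.168.1.20", "127.0.0.1", "8.8.8.8", "10.0.0.5", "1.1.1.1", "8.8.8.8"]

print(non_local_destinations(observed))
```

If a device that claims to keep everything local produces anything in that list, that’s the thread to start pulling on.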

Make privacy cool again

Making privacy appealing is not just about education.

It’s about making it cool.

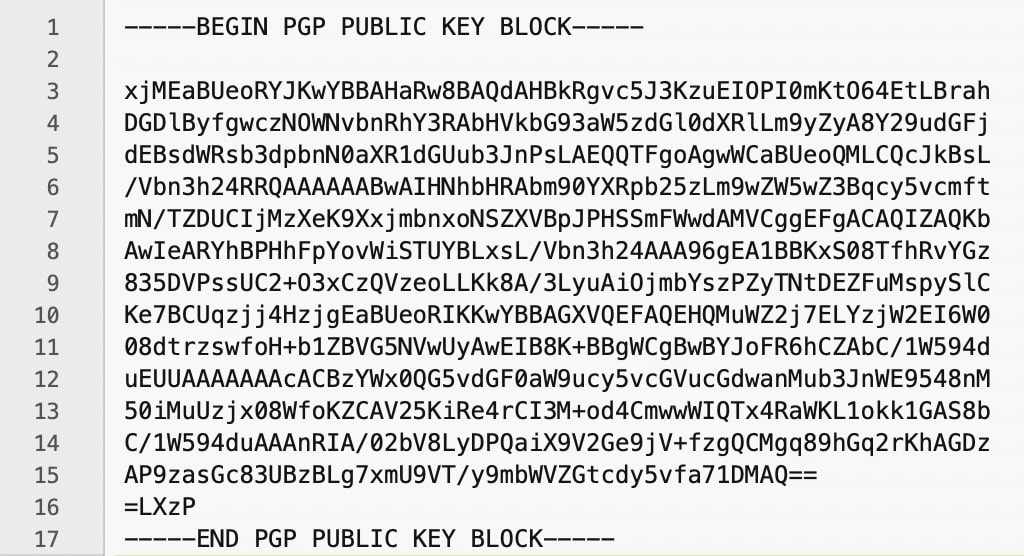

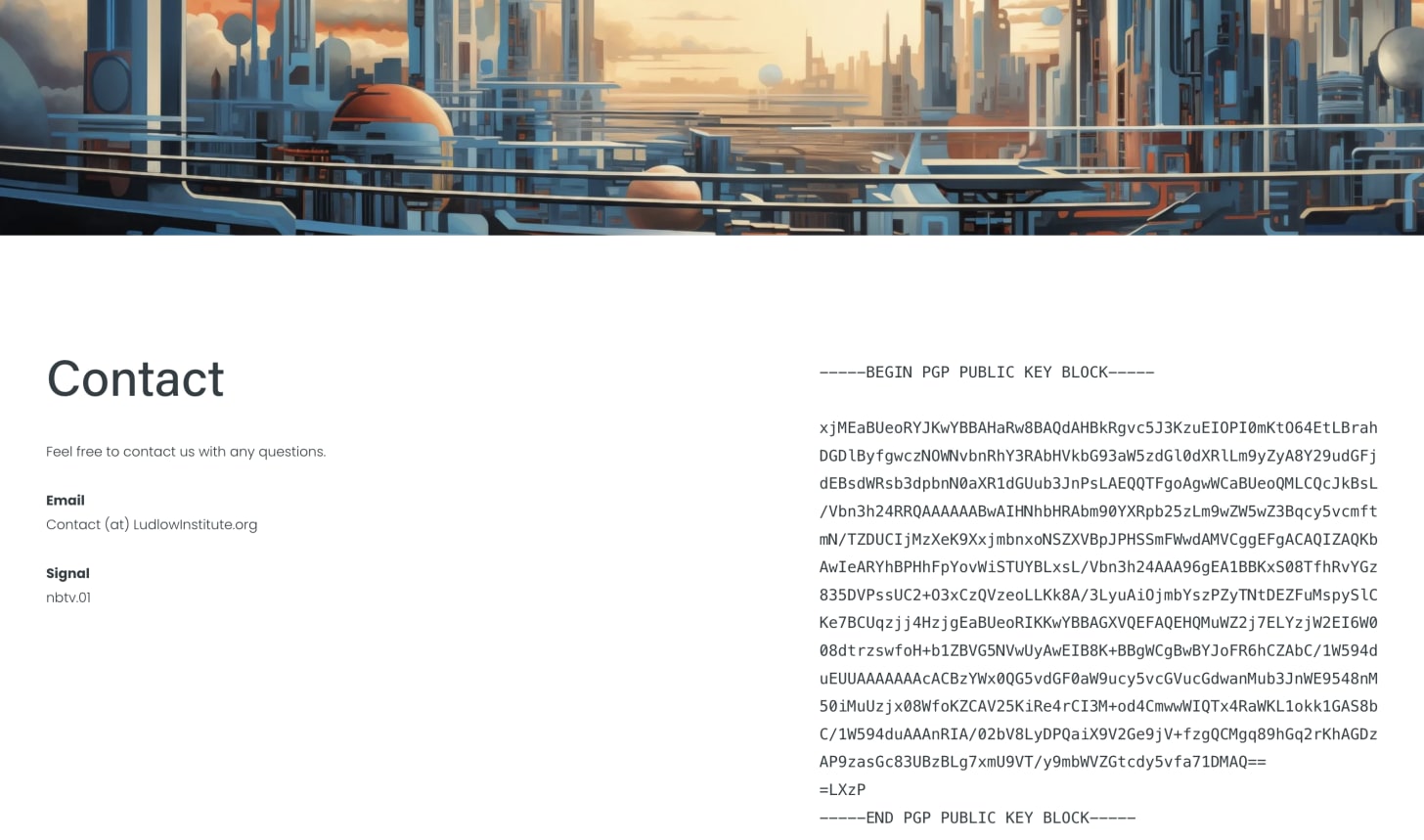

Hacker culture has always been at the forefront of turning fringe ideas into mainstream trends. Films like Hackers and The Matrix made hackers a status symbol. Movements like the Crypto Wars (when the government fought Phil Zimmermann over PGP) and the Clipper Chip fights (when the government tried to standardize surveillance backdoors across hardware) made cypherpunks and privacy activists aspirational.

Hackers take the things mainstream culture mocks or fears, and make them edgy and cool.

That’s what we need here. We need a cultural transformation, and we need to push back against the shaming language that demands we justify our desire for privacy.

You shouldn’t have to explain why you don’t want to be watched.

You shouldn’t have to defend your decision to protect your communications.

Make privacy a badge of honor.

Make privacy tools a status symbol.

Make the act of encrypting, self-hosting, and masking your identity a signal that says you’re independent, intelligent, and not easily manipulated.

Show that the people who care about privacy are the same people who invent the future.

Most people don’t like being trailblazers, because it’s scary. But if you’re reading this, you’re one of the early adopters, which means you’re already one of the fearless ones.

When you take a stand visibly, you create a quorum and make it safer for others to join in. That’s how movements grow, and how we go from being weirdos in the corner to becoming the majority.

If privacy is stigmatized, reclaiming it will take bold, fearless, visible action.

The hacker community is perfectly positioned to lead that charge, and to make it safe for the rest of the world to follow.

When you show up and say, “I care about this,” you give others permission to care too.

Privacy may be on the fringe right now, but that’s where all great movements begin.

Final Thoughts

What I learnt at DEFCON is that curiosity is powerful.

Refusal to comply is powerful.

The simple act of asking questions can be revolutionary.

There are systems all around us extracting data and consolidating control. Most people don’t know how to fight that and are too scared to try.

Hacker culture is the secret sauce.

Let’s apply this drive to the systems of surveillance.

Let’s investigate the tools we’ve been told to trust.

Let’s explain what’s actually happening.

Let’s give people the knowledge they need to make better choices.

Let’s build a world where curiosity isn’t criminalized but celebrated.

DEFCON reminded me that we don’t need to wait for permission to start doing that.

We can just do things.

So let’s start now.

Yours in privacy,

Naomi