A few weeks ago, we published an article about oversharing on social media, and how posting photos, milestones, and personal details can quietly build a digital footprint for your child that follows them for life.

But social media isn’t the only culprit.

Today, I want to talk about the devices we give our kids: the toys that talk, the tablets that teach, the monitors that watch while they sleep.

These aren’t just tools of convenience or connection. Often, they’re Trojan horses, collecting and transmitting data in ways most parents never realize.

We think we’re protecting our kids.

But in many cases, we’re signing them up for surveillance systems they can’t understand, and wouldn’t consent to if they could.

How much do you know about the toys your child is playing with?

What data are they collecting?

With whom are they sharing it?

How safely are they storing it to protect against hackers?

Take VTech, for example — a hugely popular toy company, marketed as safe, educational, and kid-friendly.

In 2015, VTech was hacked. The breach wasn’t small:

- 6.3 million children’s profiles were exposed, along with nearly 5 million parent accounts

- The stolen data included birthdays, home addresses, chat logs, voice recordings… even photos children had taken on their tablets

Terms no child can understand—but every parent accepts

It’s not just hackers we should be mindful of — often, these companies are allowed to do almost anything they want with the data they collect, including selling it to third parties.

When you hand your child a toy that connects to Wi-Fi or Bluetooth, you might be agreeing to terms that say:

- Their speech can be used for targeted advertising

- Their conversations may be retained indefinitely

- The company can change the terms at any time, without notice

And most parents will never know.

"Safe" Devices With Open Doors

What about things like baby monitors and nanny cams?

Years ago, we did a deep dive into home cameras and found that almost all popular models lacked end-to-end encryption. That means the companies that make them can potentially access your video feed.

How much do you know about that company?

How well do you trust every employee who might be able to access that feed?

But it’s not just insiders you should worry about.

Many of these kiddy cams are notoriously easy to hack. The internet is full of real-world examples of strangers breaking into monitors, watching, and even speaking to infants.

There are even publicly available tools that scan the internet and map thousands of unsecured camera feeds, sortable by country, type, and brand.

If your monitor isn’t properly secured, it’s not just vulnerable — it’s visible.

Mozilla, through its Privacy Not Included campaign, audited dozens of smart home devices and baby monitors. It checked whether products met basic standards like encryption, secure logins, and clear data-use policies. The verdict? Many popular, top-selling monitors failed even these basic checks.

These are the products we’re told are protecting our kids.

Apps that glitch and let you track other people’s kids

A T-Mobile child-tracking app recently glitched.

A mother refreshed the screen, expecting to see her kids’ locations.

Instead, she saw a stranger’s child. Then another. Then another.

Each refresh revealed a new kid in real time.

The app was broken, but the consequences weren’t abstract.

That’s dozens of children’s locations broadcast to the wrong person.

The feature that was supposed to provide control did the opposite.

Schools are part of the problem, too

Your child’s school likely collects and stores sensitive data, often without strong protections or meaningful consent.

- In Virginia, thousands of student records were accidentally made public

- In Seattle, a mental health survey led to deeply personal data being stored in unsecured systems

And it’s not just accidents.

A 2018 Fordham University study investigated “K–12 data broker” marketplaces that trade in everything from ethnicity and affluence to personality traits and reproductive health status.

Some companies offer data on children as young as two.

Others admit they’ve sold lists of 14- and 15-year-old girls for “family planning services.”

Surveillance disguised as protection

Let’s be clear: the internet is a minefield, filled with ways children can be tracked, profiled, or preyed upon. Protecting them is more important than ever.

One category of tools that’s exploded in popularity is the parental control app—software that lets you see everything happening on your child’s device:

The messages they send. The photos they take. The websites they visit.

The intention might be good. But the execution is often disastrous.

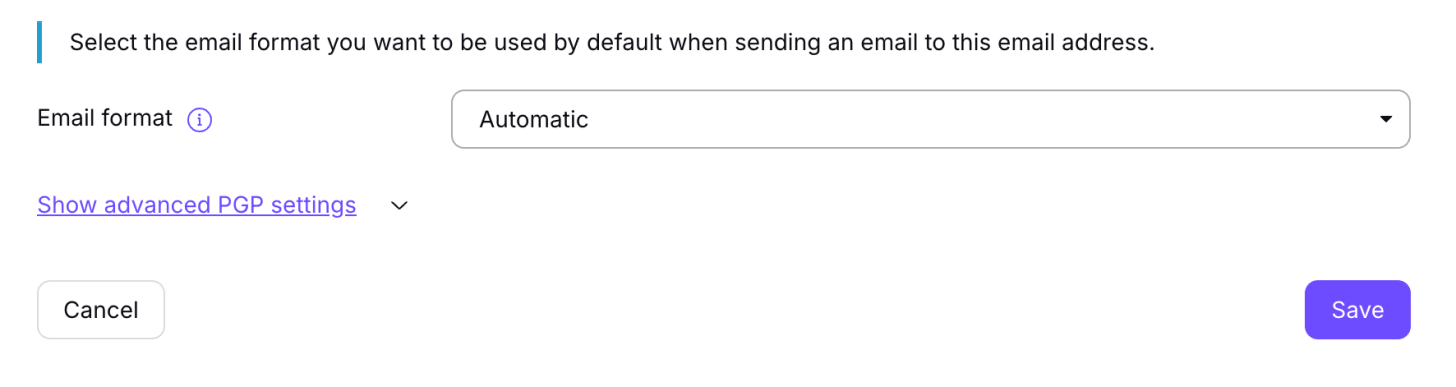

Most of these apps are not end-to-end encrypted, and many share other weaknesses:

- Faceless companies can gain full access to your child’s messages, photos, and GPS location

- Many operate in stealth mode, making them functionally indistinguishable from spyware

- Few protect that data with strong security

Again, how much do you know about these companies?

And even if you trust them, how well are they protecting this data from everyone else?

The “KidSecurity” app left 300 million records exposed, including real-time child locations and fragments of parent credit cards.

The “mSpy” app leaked private messages and movement histories in multiple breaches.

When you install one of these apps, you’re not just gaining access to your child’s world.

So is the company that built it… and everyone they fail to protect it from.

What these breaches really teach us

Here’s the takeaway from all these hacks and security failures:

Tech fails.

We don’t expect it to be perfect.

But when the stakes are this high — when we’re talking about the private lives of our children — we should be mindful of a few things:

1) Maybe companies shouldn’t be collecting so much information if they can’t properly protect it.

2) Maybe we shouldn’t be so quick to hand that information over in the first place.

When the data involves our kids, the margin for error disappears.

Your old phone might still be spying

Finally, let’s talk about hand-me-downs.

When kids inherit an old phone as their first device, it often comes loaded with years of tracking, sharing, and background data collection. What you’re really passing on may be a history of surveillance baked into the settings.

- App permissions often remain intact

- Advertising IDs stay tied to previous behavior

- Pre-installed tracking software may still be active

The moment it connects to Wi-Fi, that “starter phone” might begin broadcasting location data and device identifiers — linked to both your past and your child’s present.

Don’t opt them in by default: 8 ways to push back

So how do we protect children in the digital age?

You don’t need to abandon technology. But you do need to understand what it’s doing, and make conscious choices about how much of your child’s life you expose.

Here are 8 tips:

1: Stop oversharing

Data brokers don’t wait for your kid to grow up. They’re already building the file.

Reconsider publicly posting their photos, location, and milestones. You’re building a permanent, searchable, biometric record of your child—without their consent.

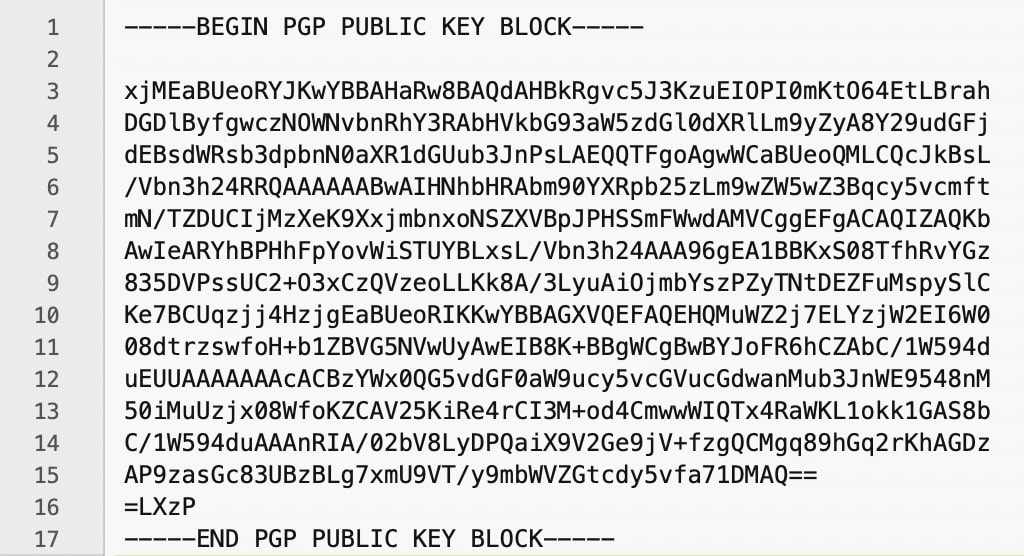

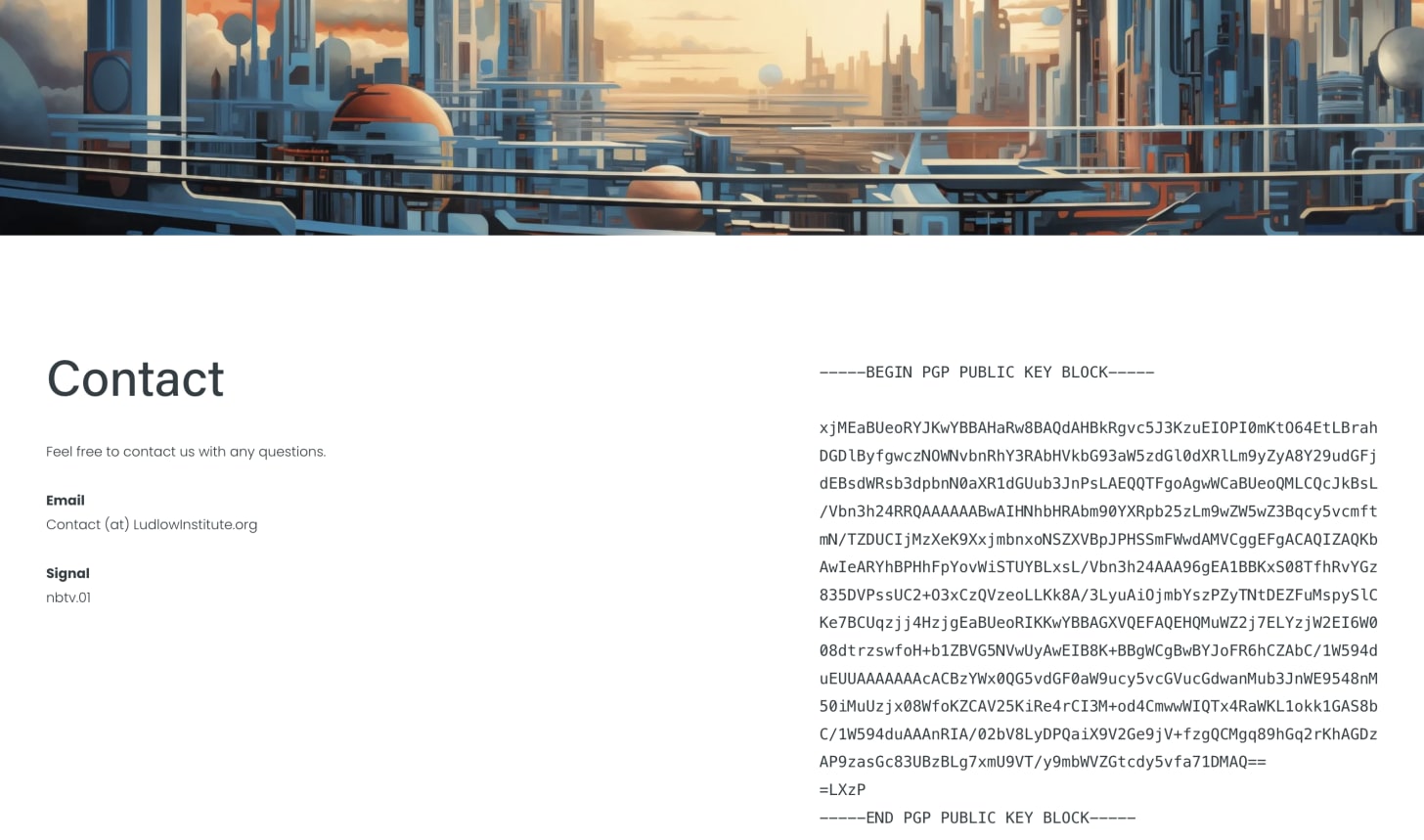

If you want to share with friends or family, do it privately through tools like Signal stories or Ente photo sharing.

2: Avoid spyware

Sometimes the best way to protect your child is to foster a relationship of trust, and educate them about the dangers.

If monitoring is essential, use self-hosted tools. Don’t give third parties backend access to your child’s life.

3: Teach consent

Make digital consent a part of your parenting. Help your child understand their identity—and that it belongs to them.

4: Use aliases and VoIP numbers

Don’t link their real identity across platforms. Compartmentalization is protection.

5: Audit tech

Factory-reset hand-me-down devices. Remove unnecessary apps. Disable default permissions.

6: Limit permissions

If an app asks for mic or camera access and doesn’t need it—deny it. Always audit.

7: Set boundaries with family

Ask relatives not to post about your child. You’re not overreacting—you’re defending someone who can’t yet opt in or out.

8: Ask hard questions

Ask your school how data is collected, stored, and shared. Push back on invasive platforms. Speak up when things don’t feel right.

Let Them Write Their Own Story

We’re not saying throw out your devices.

We’re saying understand what they really do.

This isn’t about fear. It’s about safety. It’s about giving your child the freedom to grow up and explore ideas without every version of themselves being permanently archived, and without being boxed in by a digital record they never chose to create.

Our job is to protect that freedom.

To give them the chance to write their own story.

Privacy is protection.

It’s autonomy.

It’s dignity.

And in a world where data compounds, links, and lives forever, every choice you make today shapes the freedom your child has tomorrow.

Yours in privacy,

Naomi