Stable Diffusion is an AI model whose main task is to create images. It works in a way comparable to other generative AI models such as ChatGPT: you give it a text prompt, but instead of writing text in response, it produces an image.

For example, if you give it the prompt “banana”, it will produce an image of a banana. It can also handle more complex instructions, such as a banana painted in a specific artistic style.

Besides creating entirely new images, Stable Diffusion can modify existing ones, either by adding or replacing elements within them (a process called inpainting) or by extending them beyond their original borders (a process called outpainting). Both processes work on any image, whether or not it was originally created with AI.
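Both inpainting and outpainting are usually driven by a mask that tells the model which pixels to regenerate and which to keep. The sketch below is a simplified illustration in plain Python, not Stable Diffusion's own code: images are tiny grids of grayscale values, and the helper names `inpaint_mask` and `outpaint_canvas` are hypothetical. It shows how outpainting can be treated as inpainting on an enlarged canvas: the original image sits in the middle, and only the new border is marked for the model to fill in.

```python
from typing import List, Tuple

Image = List[List[int]]  # toy grayscale image: rows of pixel values 0-255
Mask = List[List[int]]   # 1 = model should regenerate this pixel, 0 = keep it


def inpaint_mask(height: int, width: int, box: Tuple[int, int, int, int]) -> Mask:
    """Mark a rectangle (top, left, bottom, right; end-exclusive) for regeneration."""
    top, left, bottom, right = box
    return [[1 if top <= y < bottom and left <= x < right else 0 for x in range(width)]
            for y in range(height)]


def outpaint_canvas(image: Image, pad: int, fill: int = 0) -> Tuple[Image, Mask]:
    """Enlarge the canvas by `pad` pixels on every side.

    The original pixels are copied into the centre and masked as 0 (keep);
    the new border starts as `fill` and is masked as 1 (regenerate)."""
    h, w = len(image), len(image[0])
    canvas = [[fill] * (w + 2 * pad) for _ in range(h + 2 * pad)]
    mask = [[1] * (w + 2 * pad) for _ in range(h + 2 * pad)]
    for y in range(h):
        for x in range(w):
            canvas[y + pad][x + pad] = image[y][x]
            mask[y + pad][x + pad] = 0
    return canvas, mask


if __name__ == "__main__":
    img = [[10, 20], [30, 40]]
    canvas, mask = outpaint_canvas(img, pad=1)
    for row in mask:
        print(row)
    # [1, 1, 1, 1]
    # [1, 0, 0, 1]
    # [1, 0, 0, 1]
    # [1, 1, 1, 1]
```

In a real tool, the canvas and mask would then be handed to the model, which regenerates only the masked pixels so that they blend in with the ones it was told to keep.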

The Stable Diffusion model is open source: its code and trained model weights are publicly available, so anyone can download and run it, subject to the terms of its license.

How can AI generate images?

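AI can generate images in several ways; Stable Diffusion uses a technique called latent diffusion, and the model itself is a Latent Diffusion Model (LDM). It starts from random noise, similar to the static on an analog TV screen. Over a series of steps it gradually removes noise, with the text prompt guiding each step, until an image matching the prompt emerges. To save computation, this happens in a compressed “latent” representation of the image rather than on the full-size pixels, and the result is decoded into a normal image at the end. The model can do this because it was trained on the opposite process: noise was added to existing images, and the model learned to predict that noise, so generating an image essentially means running the process in reverse.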
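To make the step-by-step denoising more concrete, here is a toy sketch in Python. It is not Stable Diffusion: it works on single numbers instead of latent images, uses no text prompt, and replaces the trained neural network with an exact formula (the hypothetical `predict_noise` helper) that is only available because the toy “training data” is a simple bell curve with mean `mu` and spread `s`. The schedule values and all function names are illustrative assumptions. It follows the standard DDPM recipe: the forward process blends a clean value with Gaussian noise, and sampling starts from pure noise and subtracts a little of the predicted noise at each step.

```python
import math
import random


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.04):
    """Linear noise schedule: per-step noise amounts (betas) and their
    running products alpha_bar[t] = prod(1 - beta) up to step t."""
    betas = [beta_start + (beta_end - beta_start) * t / (num_steps - 1)
             for t in range(num_steps)]
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return betas, alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Forward (training-time) process: mix a clean value with Gaussian noise."""
    eps = rng.gauss(0.0, 1.0)
    return math.sqrt(alpha_bar) * x0 + math.sqrt(1.0 - alpha_bar) * eps


def predict_noise(x_t, alpha_bar, mu, s):
    """Hypothetical stand-in for the trained network: the exact expected noise
    when the training data is a 1-D Gaussian with mean mu and std s."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return b * (x_t - a * mu) / (a * a * s * s + b * b)


def sample(num_steps, mu, s, rng):
    """Reverse process: start from pure noise and remove a little per step."""
    betas, alpha_bars = make_schedule(num_steps)
    x = rng.gauss(0.0, 1.0)  # the "TV static" starting point
    for t in reversed(range(num_steps)):
        beta, alpha_bar = betas[t], alpha_bars[t]
        eps_hat = predict_noise(x, alpha_bar, mu, s)
        # Subtract the predicted noise, rescale, then re-inject a bit of
        # fresh noise on every step except the last one.
        x = (x - beta / math.sqrt(1.0 - alpha_bar) * eps_hat) / math.sqrt(1.0 - beta)
        if t > 0:
            x += math.sqrt(beta) * rng.gauss(0.0, 1.0)
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    _, alpha_bars = make_schedule(500)
    # Training direction: by the last step a clean 3.0 is almost pure noise.
    print(add_noise(3.0, alpha_bars[-1], rng))
    # Generation direction: 1000 samples, each starting from pure noise.
    samples = [sample(500, mu=3.0, s=0.5, rng=rng) for _ in range(1000)]
    mean = sum(samples) / len(samples)
    std = math.sqrt(sum((v - mean) ** 2 for v in samples) / len(samples))
    print(f"mean={mean:.2f} std={std:.2f}")  # expected to be close to 3.0 and 0.5
```

The samples end up distributed like the “training data” even though each one started as random noise. In a real diffusion model, `predict_noise` is a large neural network learned from examples and conditioned on the text prompt, but the loop that walks from noise to a result has the same basic shape.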

Stable Diffusion was trained on a huge collection of images scraped from the internet (subsets of the LAION-5B dataset), including many from sites such as Pinterest, DeviantArt and Flickr. Each image was paired with a text description, typically its caption or alt text, which is how the model learned what different objects and styles look like.

What is Stable Diffusion used for?

Stable Diffusion is used both to create new images from text prompts and to modify existing ones through inpainting and outpainting. For example, it can generate an entire scene from a detailed text description, or replace a small part of an existing image while leaving the rest untouched.

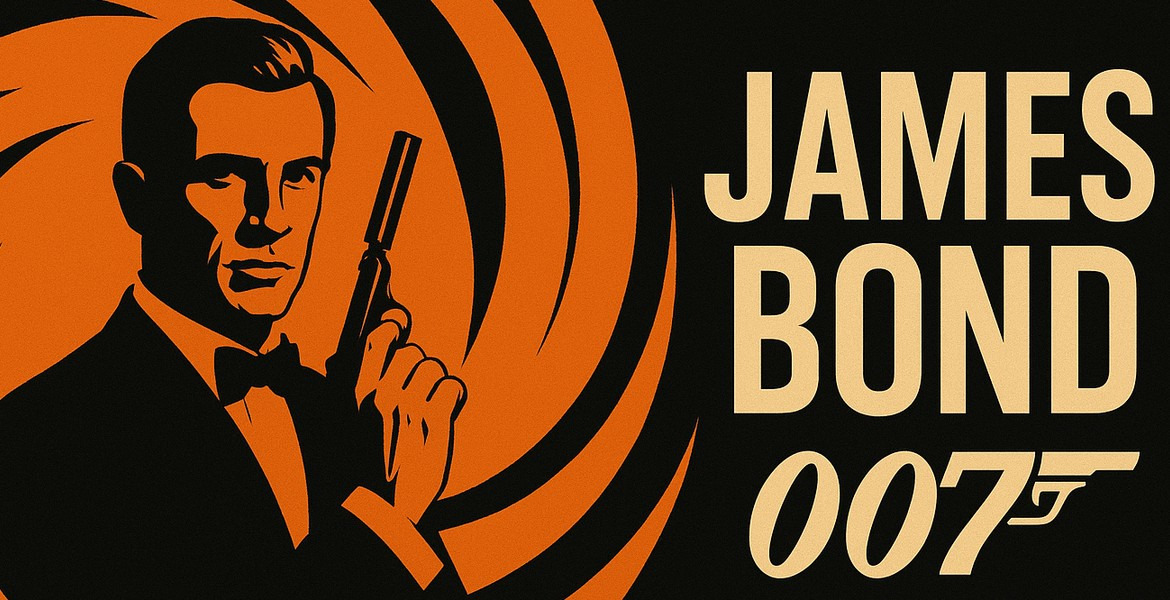

Depending on the prompt and settings, Stable Diffusion can create photorealistic images that are hard to tell apart from real photographs, images that look like hand-drawn or painted artwork, or images that are obviously artificial.

Can you tell when an image is AI-generated?

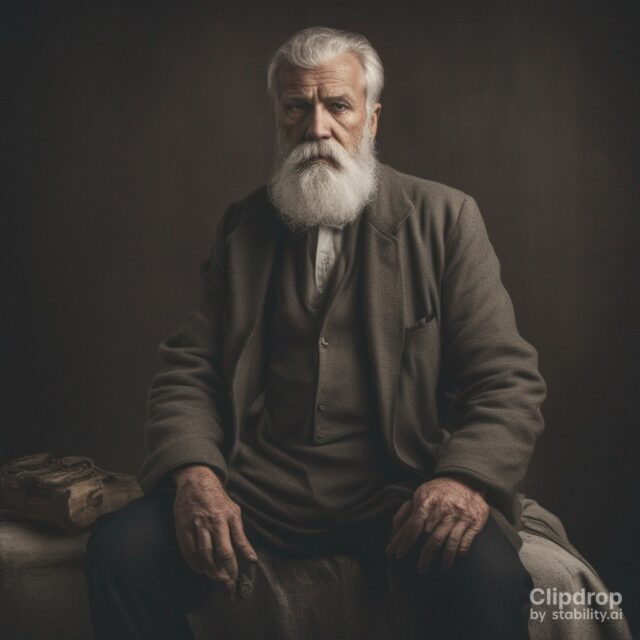

One way to spot AI-generated art is to look at the hands: Stable Diffusion and other models often struggle to render them correctly, producing extra, missing or malformed fingers. If the subject of an image conspicuously hides their hands, that may be a sign that someone has used clever “prompt engineering” to work around this flaw in the AI model. However, AI models are improving very quickly, so flaws like this are likely to be short-lived.

In principle, images generated by Stable Diffusion can be used for any purpose, but AI-generated content comes with a number of pitfalls.

Because an image generator has to learn what objects look like from somewhere, its developers collected images with accompanying text descriptions from across the internet. They did so without the permission of the original creators, which raises copyright issues.

This problem is particularly tricky because, although Stable Diffusion does not literally cut and paste pieces of its training images, everything it produces is derived from patterns learned from them, and it can occasionally reproduce a training image quite closely. So both in learning and in creating, it relies on other artists’ work, whether they have given permission or not. Sites like DeviantArt have tried to head off an exodus of artists by letting users opt out of having their art used to train AI systems.

The copyright status of works created partly with AI is also unclear: applications to register works that include AI-generated elements have been rejected, at least in part. Meanwhile, AI-powered image generation threatens the livelihoods of traditional artists, who risk losing work to this cheaper, “easier” method.

How to try the tool for free

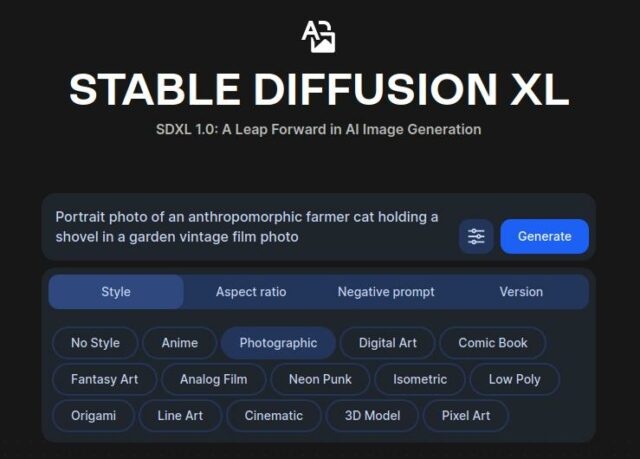

Stable Diffusion can be tried for free. Use the official link to the service.

Write a request, known as a prompt, or use one of the pre-written examples. Stable Diffusion will generate several different images. You can either download one of them or press the plus sign to request new suggestions.

By clicking the settings icon, you can choose an image style such as photographic, cartoon or 3D model.

You can then try the other tools, which let you remove the background behind an object or expand, scale and transform your images. If you create a free account by entering an email address, you can generate up to 400 images per day for free.

Try it out and see if you’re more successful as an “AI artist” than with a brush in your drawer.

What is AI-based art?

The term 'AI-based art' covers images produced by tools such as Stable Diffusion, Midjourney, DALL-E and other image generators that work from natural-language prompts. Each tool may learn and produce images in its own way, but all of their output falls under the category of 'AI-based art'.