Elon Musk’s AI company xAI has launched the third-generation language model Grok 3, which the company says outperforms competitors such as ChatGPT and Google’s Gemini. During a live presentation, Musk claimed that the new model is “maximally truth-seeking” and ten times more capable than its predecessor.

Grok 3, trained on 100,000 Nvidia H100 GPUs at xAI’s Colossus supercluster in Memphis, Tennessee, is described as a milestone in artificial intelligence. According to xAI, the model has a unique ability to combine logical reasoning with extensive data processing. During the presentation, this was demonstrated by having the model create a game that mixes Tetris and Bejeweled and plan a complex space journey from Earth to Mars. Musk emphasized that Grok 3 is designed to “favor truth over political correctness” – a direct criticism of competitors he considers too censored.

Technical capacity and competitiveness

According to data from xAI, Grok 3 has outperformed GPT-4o and Google’s Gemini in academic tests, including doctoral-level physics and biology. The model comes in two versions: the full-scale Grok 3 and the lighter Grok 3 mini, which prioritizes speed over accuracy. It also introduces the DeepSearch feature, an AI-powered search engine that compiles information from across the internet into coherent answers.

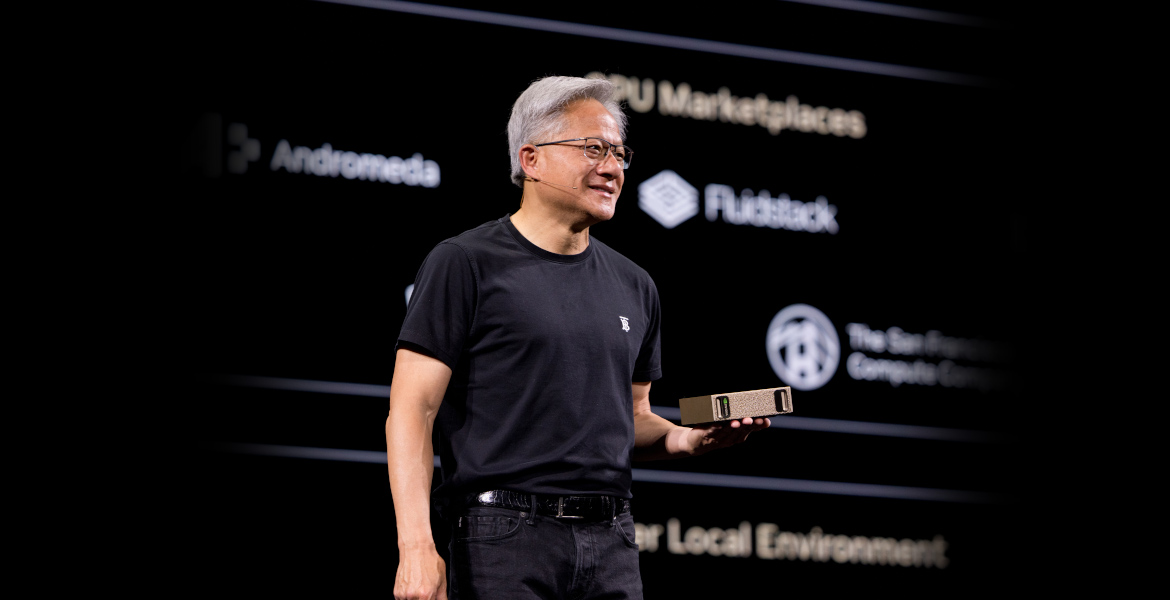

Early tests by experts such as Andrej Karpathy, former head of AI at Tesla, place Grok 3 at the forefront of logical reasoning, although Karpathy also notes that the differences from competitors such as OpenAI’s o1-pro are marginal. Still, the pace of development is impressive: xAI built its supercomputer in eight months, compared to the industry standard of four years, according to Nvidia CEO Jensen Huang.

> xAI and Elon Musk shocked the world with mind blowing Grok 3 demos yesterday.
>
> And people are already doing wild use cases with it.
>
> 10 examples: pic.twitter.com/rCRsfp42NQ
>
> — Min Choi (@minchoi) February 18, 2025

Availability and reviews

Grok 3 is being released first to paying users of X (formerly Twitter) through the Premium+ subscription. A more expensive tier, SuperGrok, provides access to advanced features such as unlimited image generation. However, Musk warned during the launch that the first version is a “beta” and may contain bugs – a call for patience.

Criticism of the launch has been harsh. Researchers and tech experts question xAI’s benchmark results, which they say are difficult to verify independently. Others point to risks of training AI on data from X, where misinformation and spam posts are common.

Some experts, such as AI researcher Findecanor, also criticize the name “Grok” – a term from science fiction describing deep understanding – as misleading for a model that they say lacks genuine insight. In addition, Musk’s earlier controversial statements about the potential dangers of AI have fueled skepticism about his own platform.

Vision for the future

Despite the criticism, xAI is betting big. The company plans to release Grok 2 as open source once Grok 3 is stabilized, which would allow community contributions to the technology. A voice feature and integrations for businesses via API are also in the works.

Meanwhile, a power struggle is underway in the AI industry. Musk recently tried to buy OpenAI for $97 billion, an offer rejected by CEO Sam Altman, who described it as an attempt to “destabilize” the competitor. With Grok 3, xAI is positioning itself as a key player in the global AI race – but the question is whether its promises can be fulfilled without increasing polarization around the ethics and trustworthiness of the technology.